Off-The-Record Messaging part 2: deniability and forward secrecy

15 Feb 2022

This is part 2 of a 4 part series about Off-The-Record Messaging (OTR), a cryptographic messaging protocol that protects its users’ communications even if they get hacked. In part 1 we looked at problems with common encrypted messaging protocols, such as PGP. We also considered two desirable properties for messaging protocols to have: confidentiality and authenticity. In this post (part 2) we’re going to look at two more important properties - deniability and forward secrecy - and see that many protocols fail to achieve them. We’ll then be ready to see how OTR works and how it provides each of confidentiality, authenticity, deniability and forward secrecy in parts 3 and 4.

Index

- Part 1: the problem with PGP

- Part 2: deniability and forward secrecy

- Part 3: how OTR works

- Part 4: OTR’s key insights

Deniability

Deniability is the ability for a person to credibly deny that they knew or did something. It’s one of the main focusses of OTR, but one of the main weaknesses of PGP.

Statements from in-person conversations are usually easily deniable. If you claim that I told you that I robbed a bank then I can credibly retort that I didn’t and you can’t prove otherwise.

Email conversations can be deniable too (although see below for a look into why in practice they often aren’t). Suppose that you forward to a journalist the text of an email in which I appear to describe my plans to rob a hundred banks. I can credibly claim that you edited the email or forged it from scratch, and you still can’t prove that I’m lying. The public or the police or the judge might believe your claims over mine, but nothing is mathematically provable and we’re down in the murky world of human judgement.

By contrast, we’ve seen that PGP-signed messages are not deniable. If Alice signs a message and sends it to Bob then Bob can use the PGP signature to validate that the message is authentic. However, all this validation requires is Alice’s public key, which is freely available. This means that anyone else who comes into possession of the message can also validate the signature and prove that Alice sent the message in exactly the same way that Bob did. Alice is therefore permanently on the record as having sent these messages. If Eve hacks Bob’s messages, or if Alice and Bob fall out and Bob forwards their past communication to her enemies, Alice cannot plausibly deny having sent the messages in the same way as she could if she had never signed them. If you forward to a journalist a cryptographically signed email in which I describe my plans to rob a thousand banks, I will be in a pickle.
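To make the "anyone can verify" point concrete, here is a minimal sketch using a toy, textbook-style RSA signature. It is not how PGP really formats or pads signatures, and the key below is an assumption chosen for brevity (it is built from two well-known Mersenne primes, so it is trivially breakable). The only point is that the `verify` function needs nothing but public values.

```python
import hashlib

# Toy RSA key for Alice. Illustration only: these primes are famous Mersenne
# primes, so this key offers no real security.
P, Q = 2**127 - 1, 2**89 - 1
N, E = P * Q, 65537                     # Alice's public key: (N, E)
D = pow(E, -1, (P - 1) * (Q - 1))       # Alice's private exponent (Python 3.8+)


def digest(message: bytes) -> int:
    """Hash the message and reduce it into the range of the toy modulus."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N


def sign(message: bytes, private_exponent: int) -> int:
    """Only someone holding the private exponent can produce this value."""
    return pow(digest(message), private_exponent, N)


def verify(message: bytes, signature: int) -> bool:
    """Uses only the public values N and E, so anyone can run this check."""
    return pow(signature, E, N) == digest(message)


message = b"I plan to rob a thousand banks. -- Alice"
signature = sign(message, D)

# Bob checks the signature when he receives the message...
bob_is_convinced = verify(message, signature)
# ...and a journalist who is later handed the same two values runs the same check.
journalist_is_convinced = verify(message, signature)
# An edited copy of the message no longer matches the signature.
edited_copy_checks_out = verify(b"I plan to rob zero banks. -- Alice", signature)
```

Bob and the journalist call exactly the same function with exactly the same inputs, which is why a signature that convinces Bob also convinces everyone else.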

On the face of it, deniability is in tension with authenticity. Authenticity requires that Bob can prove to himself that a message was sent by Alice. By contrast, deniability requires that Alice can deny having ever sent that same message. As we’ll see, one of the most interesting innovations of OTR is how it achieves these seemingly contradictory goals simultaneously.

Technically anything can be denied, even cryptographic signatures. I can always claim that someone stole my computer and guessed my password, or infected my computer with malware, or stole my private keys while I was letting them use my computer to look up the football scores. These claims are not impossible, but they are unlikely and tricky to argue. Deniability is a sliding scale of plausibility, and so OTR goes to great lengths to make denials more believable and therefore more plausible. “A dog ate my homework” is a much more credible excuse if you ostentatiously purchased twenty ferocious dogs the day before.

It’s perfectly reasonable to believe that a deniable message is still authentic. We all assess and believe hundreds of claims every day using vague balances of probability, without mathematical proof. Screenshots of a WhatsApp conversation might reasonably be enough to convince the authorities of my plans to rob ten thousand banks, even without cryptographic signatures.

But as we’ll now see, if signatures are available then that does make everything easier.

DKIM, the Podesta Emails, and the Hunter Biden laptop

For message senders, deniability is almost always a desirable property. There’s rarely any advantage to having everything you say or write go into an indelible record that might come back to haunt you. This is not the same thing as saying that deniability is an objectively good thing that makes the world strictly better. Just take the intertwined stories of the DKIM email verification protocol; US Democratic Party operative John Podesta; and the laptop of Hunter Biden, son of President Joe Biden.

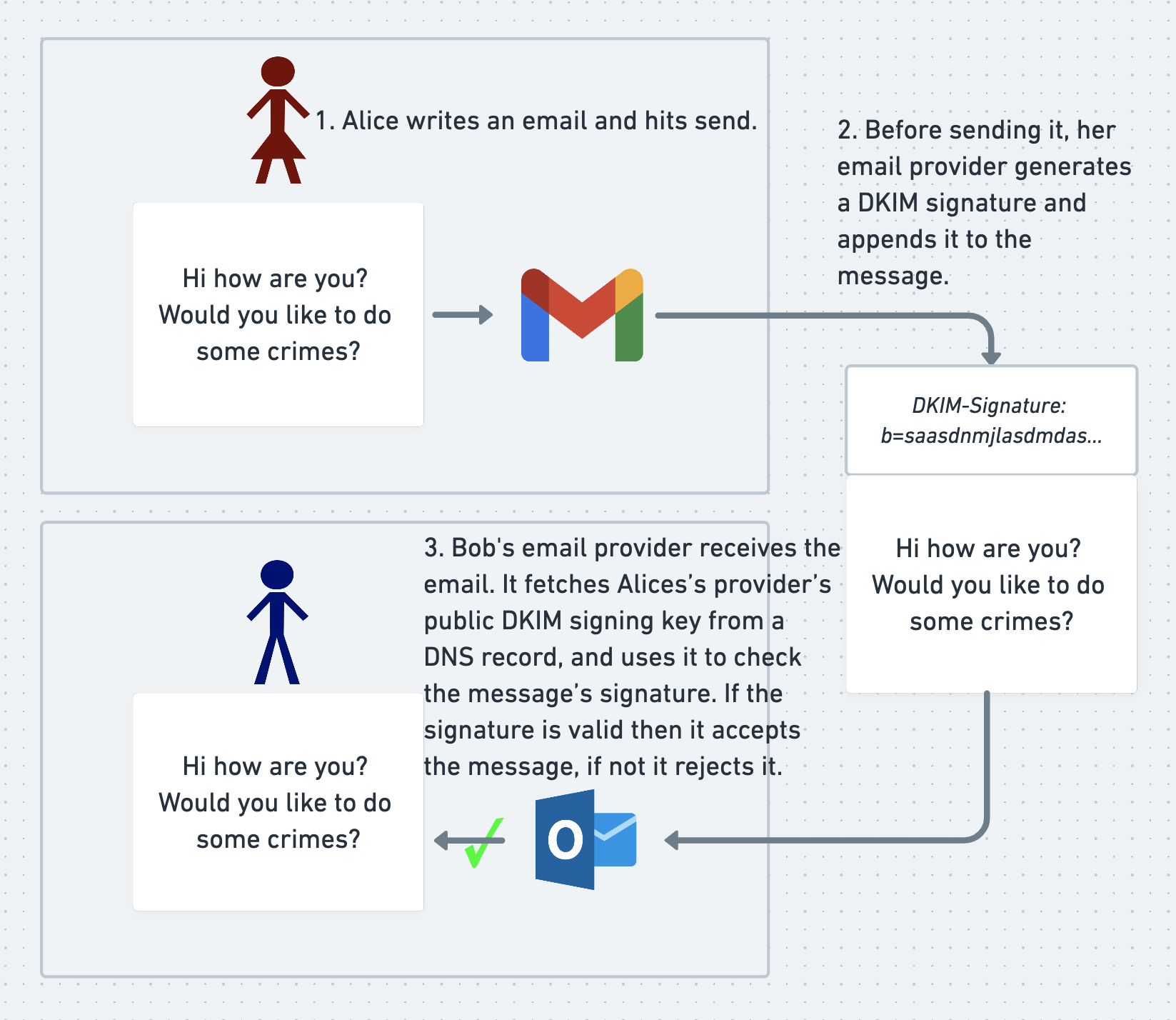

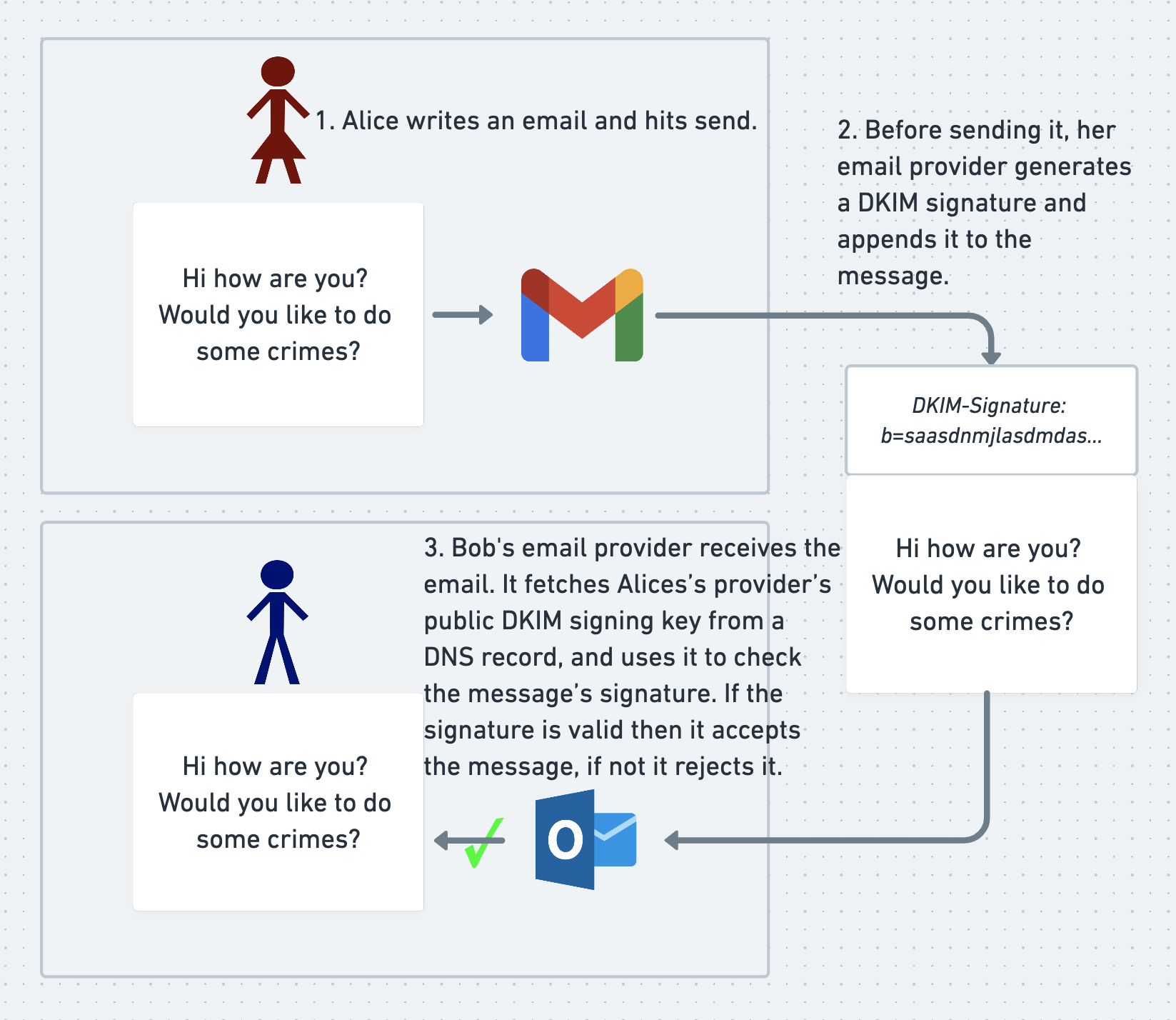

In order to understand the politics of these stories, we first need to discuss the protocols. In the old days, when an email provider received an email claiming to be from [email protected], there was no way for the provider to verify that the email really was sent by [email protected]. The provider therefore typically crossed their fingers, hoped that the email was legitimate, and accepted it. Spammers abused this trust to bombard email inboxes with forged emails. The DKIM protocol was created in 2004 to allow email providers to verify that the emails they receive are legitimate, and the protocol is still used to this day.

DKIM uses many of the same techniques as PGP. In order to use DKIM (which stands for DomainKeys Identified Mail), email providers generate a keypair and publish the public key to the world (via a DNS TXT record, although the exact mechanism is not important to us here). When a user sends an email, their email provider generates a signature for the message using the provider’s private signing key. The provider inserts this signature into the outgoing message as an email header.

When the receiver’s email provider receives the message, it looks up the sending provider’s public key and uses it to check the DKIM signature against the email’s contents, in much the same way as a recipient would check a PGP message signature against a PGP message’s contents. If the signature is valid, the receiving provider accepts the message. If it isn’t, the receiving provider assumes that the message is forged and rejects it. Since spammers don’t have access to mail providers’ signing keys, they can’t generate valid signatures. This means that they can’t generate fake emails that pass DKIM verification, making DKIM very good at detecting and preventing email forgery.
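The sign-then-verify flow described above can be sketched roughly as follows. This is a heavily simplified model, not real DKIM: the dictionary `PUBLISHED_KEYS` stands in for the DNS TXT lookup, the `signature` field stands in for the DKIM header, the domain names are made up, and the toy RSA key (built from well-known Mersenne primes) is insecure by design. Real DKIM also canonicalizes the message and signs a chosen set of headers, which is omitted here.

```python
import hashlib

# Toy RSA keypair for the sending provider (illustration only, not secure).
P, Q = 2**127 - 1, 2**89 - 1
N, E = P * Q, 65537
D = pow(E, -1, (P - 1) * (Q - 1))       # kept secret by the sending provider

# Hypothetical stand-in for the public keys that providers publish via DNS.
PUBLISHED_KEYS = {"mail.example": (N, E)}


def digest(email: dict, modulus: int) -> int:
    """Hash the parts of the email that the signature covers."""
    signed_part = f"{email['from']}\n{email['to']}\n{email['body']}".encode()
    return int.from_bytes(hashlib.sha256(signed_part).digest(), "big") % modulus


def provider_sign(email: dict) -> dict:
    """Sending provider: attach a signature to the outgoing email."""
    return {**email, "signature": pow(digest(email, N), D, N)}


def receiver_accepts(email: dict) -> bool:
    """Receiving provider: look up the sender's public key, check the signature."""
    domain = email["from"].split("@")[1]
    n, e = PUBLISHED_KEYS[domain]
    return pow(email["signature"], e, n) == digest(email, n)


genuine = provider_sign(
    {"from": "alice@mail.example", "to": "bob@other.example", "body": "Lunch?"}
)
# A spammer can write any headers they like, but can only guess at a signature.
spam = {"from": "alice@mail.example", "to": "bob@other.example",
        "body": "Buy cheap pills", "signature": 12345}
# Changing the body after signing also breaks the signature.
tampered = {**genuine, "body": "Send me your password"}

genuine_accepted = receiver_accepts(genuine)
spam_accepted = receiver_accepts(spam)
tampered_accepted = receiver_accepts(tampered)
```

Note that `receiver_accepts` uses only the email itself and the published key. That is convenient for the receiving provider, and it is also what makes the check repeatable by anyone else later on.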

But as we’ve seen with PGP, where there’s a cryptographic signature, there might also be a problem with deniability. As well as providing authentication, a DKIM signature provides permanent, undeniable proof that the signed message is authentic. DKIM signatures are part of the contents of an email, so they are saved in the recipient’s inbox. If a hacker steals all the emails from an inbox, they can validate the DKIM signatures themselves using the sending provider’s public DKIM key, in the same way that the recipient’s email provider did when it received the message. The attacker already knows that the emails are legitimate, since they stole them with their own two hands. However, DKIM signatures allow them to prove this fact to a sceptical third party as well, obliterating the emails’ deniability. Email providers change, or rotate, their DKIM keys regularly, which means that the public key currently in their DNS record may be different to the key used to sign the message. Fortunately for the attacker, historical DKIM public keys for many large mail providers can easily be found on the internet.

Matthew Green, a professor at Johns Hopkins University, points out that making emails non-repudiable in this way is not one of the goals of the DKIM protocol. Rather, it’s an odd side-effect that wasn’t contemplated when DKIM was originally designed and deployed. Green argues that DKIM signatures make email hacking a much more lucrative pursuit. It’s hard for a journalist or a foreign government to trust a stolen dump of unsigned emails sent to them by an associate of an associate of an associate, since any of the people in this long chain of associates could have faked or embellished the emails’ contents. However, if the emails contain cryptographic DKIM signatures generated by trustworthy third parties (such as a reputable email provider), then the emails are provably real, no matter how questionable the character who gave them to you. Cryptographic signatures don’t decay with social distance or sordidness. Data thieves are able to piggy-back on Gmail’s (or any other DKIM signer’s) credibility, making stolen, signed emails a verifiable and therefore more valuable commodity.

This causes problems in the real world. In October 2016, Wikileaks began publishing a dump of emails that had been hacked, in March of that year, from the Gmail account of John Podesta, a US Democratic Party operative. Alongside each email Wikileaks published the corresponding DKIM signature, generated by Gmail or whichever provider sent the email. This allowed independent verification of the messages, which prevented Podesta from claiming that the emails were nonsense fabricated by liars.

You may think that the Podesta hack was, in itself, a good thing for democracy, or a terrible thing for a private citizen. You may believe that the long-term verifiability of DKIM signatures is a societal virtue that increases transparency, or a blunder that incentivizes email hacking. But whatever your opinions, you’d have to agree that John Podesta definitely wishes that his emails didn’t have long-lived proofs of their provenance, and that most individual email users would like their own messages to be sent deniably and off-the-record.

Matthew Green has a counter-intuitive but elegant solution to this problem. Google already regularly rotate their DKIM keys. They do this as a best-practice precaution, in case the keys have been compromised without Google realizing. Green proposes that once Google (and other mail providers) have rotated a DKIM keypair and taken it out of service, they should publicly publish the keypair’s private key.

This sounds odd. Publishing a DKIM private key means that anyone can use it to spoof a valid-looking DKIM signature for an email. If the keypair were still in use, this would make Google’s DKIM signatures useless. However, since the keypair has been retired, revealing the private key does not jeopardize the effectiveness of DKIM in any way. The purpose of DKIM is to allow email recipients to verify the authenticity of a message at the time they receive that message. Once the recipient has verified and accepted the message, they don’t need to re-verify it in the future. The signature has no further use unless someone - such as an attacker - wants to later prove the provenance of an email.

Since DKIM only requires signatures to be valid and verifiable when the email is received, it doesn’t matter if an attacker can spoof a signature for an old email. In fact it’s desirable, because it renders worthless all DKIM signatures that were legitimately generated by the email provider using the now-public private key. Suppose that an attacker has stolen an old email dump containing many emails sent by Gmail, complete with DKIM signatures generated using Gmail’s now-public private key. Previously, only Google could have generated these signatures, and so the signatures proved that the emails were genuine. However, since the old private key is now public, anyone can use it to generate valid signatures themselves. All a forger has to do is take the now-public private DKIM key of the email provider, plus the contents of the email that they want to sign. They use the key to sign the email, in much the same way as they would sign a PGP message. Finally, they add the resulting signature as an email header, just as the email provider would.
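Here is a rough sketch of why publishing a retired private key destroys the evidential value of old signatures. As before, this uses a toy RSA key (assumed for illustration, built from well-known Mersenne primes, and not secure) and a simplified signature over the whole email text rather than real DKIM headers. The email contents and variable names are invented.

```python
import hashlib

# Toy RSA keypair that the provider used while the key was in service.
P, Q = 2**127 - 1, 2**89 - 1
N, E = P * Q, 65537
provider_private_exponent = pow(E, -1, (P - 1) * (Q - 1))


def digest(email_text: str) -> int:
    return int.from_bytes(hashlib.sha256(email_text.encode()).digest(), "big") % N


def sign(email_text: str, private_exponent: int) -> int:
    return pow(digest(email_text), private_exponent, N)


def verify(email_text: str, signature: int) -> bool:
    return pow(signature, E, N) == digest(email_text)


# While the key is in service, only the provider can produce this signature.
genuine_email = "From: alice@mail.example\n\nLunch on Friday?"
genuine_signature = sign(genuine_email, provider_private_exponent)

# The provider retires the keypair and publishes the private half.
published_private_exponent = provider_private_exponent

# Now a forger can sign an email that Alice never wrote.
forged_email = "From: alice@mail.example\n\nLet us rob a bank on Friday."
forged_signature = sign(forged_email, published_private_exponent)

genuine_verifies = verify(genuine_email, genuine_signature)
forgery_verifies = verify(forged_email, forged_signature)
```

Both checks pass, and nothing in the signatures themselves records when they were made. A sceptical third party who is shown a signed email from this key therefore can’t tell a genuine one from a forgery created after publication.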

This means that unless an attacker with a stolen batch of signed emails can prove that their signatures were generated while the key was still private (which in general they won’t be able to), the signatures don’t prove anything about anything to a sceptical third party. This applies even if the attacker really did steal the emails and is engaging in only a single layer of simple malfeasance.

But how much difference does any of this really make? Wouldn’t everyone have believed the Podesta Emails anyway, without the signatures? Possibly. But consider a more recent example in which I think that DKIM signatures could have changed the course of history, had they been available.

During the 2020 US election campaign, Republican operatives claimed to have gained access to a laptop belonging to Hunter Biden, son of the then-Democratic candidate and now-President Joe Biden. The Republicans claimed that this laptop contained gobs of explosive emails and information about the Bidens that would shock the public.

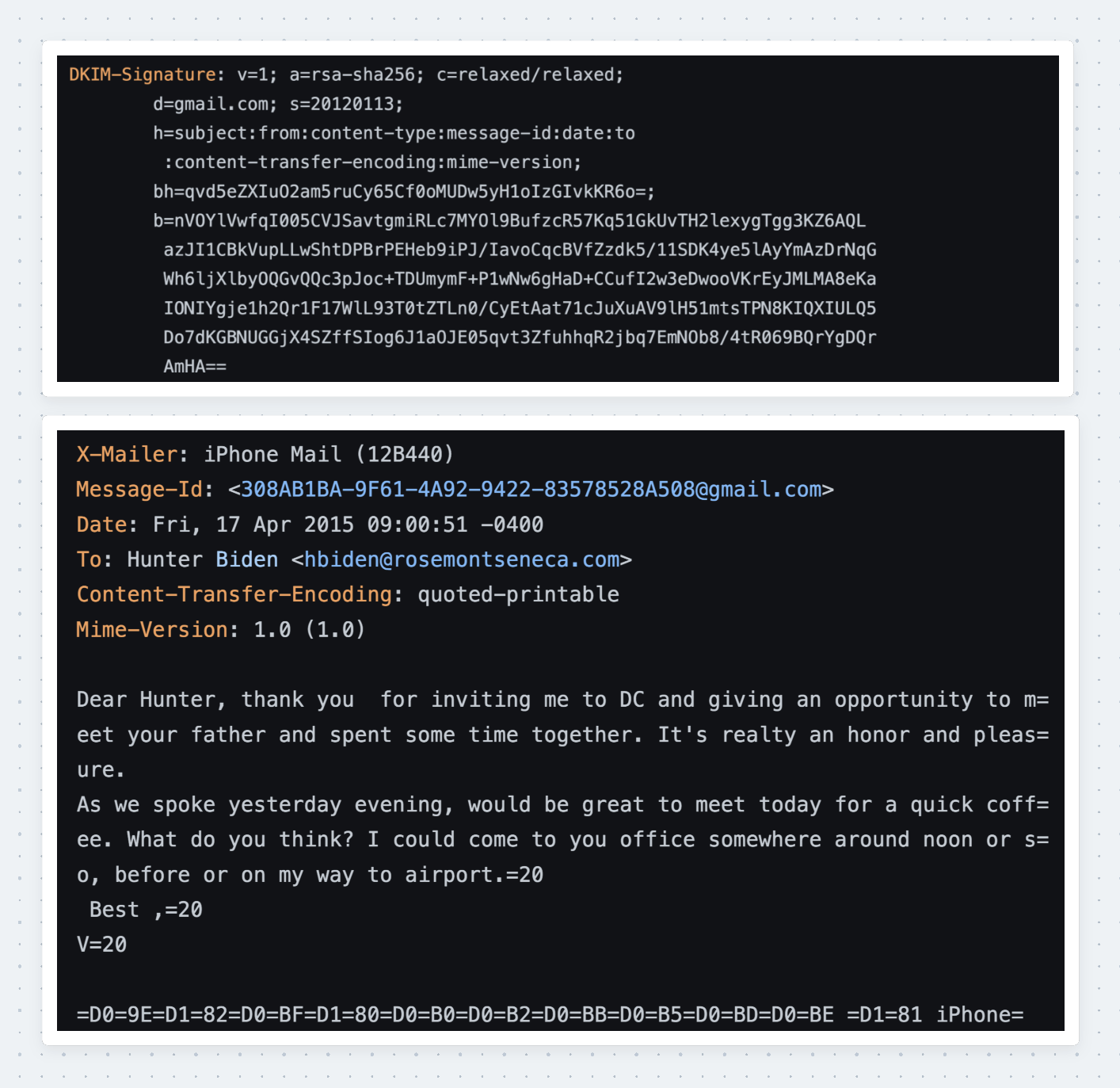

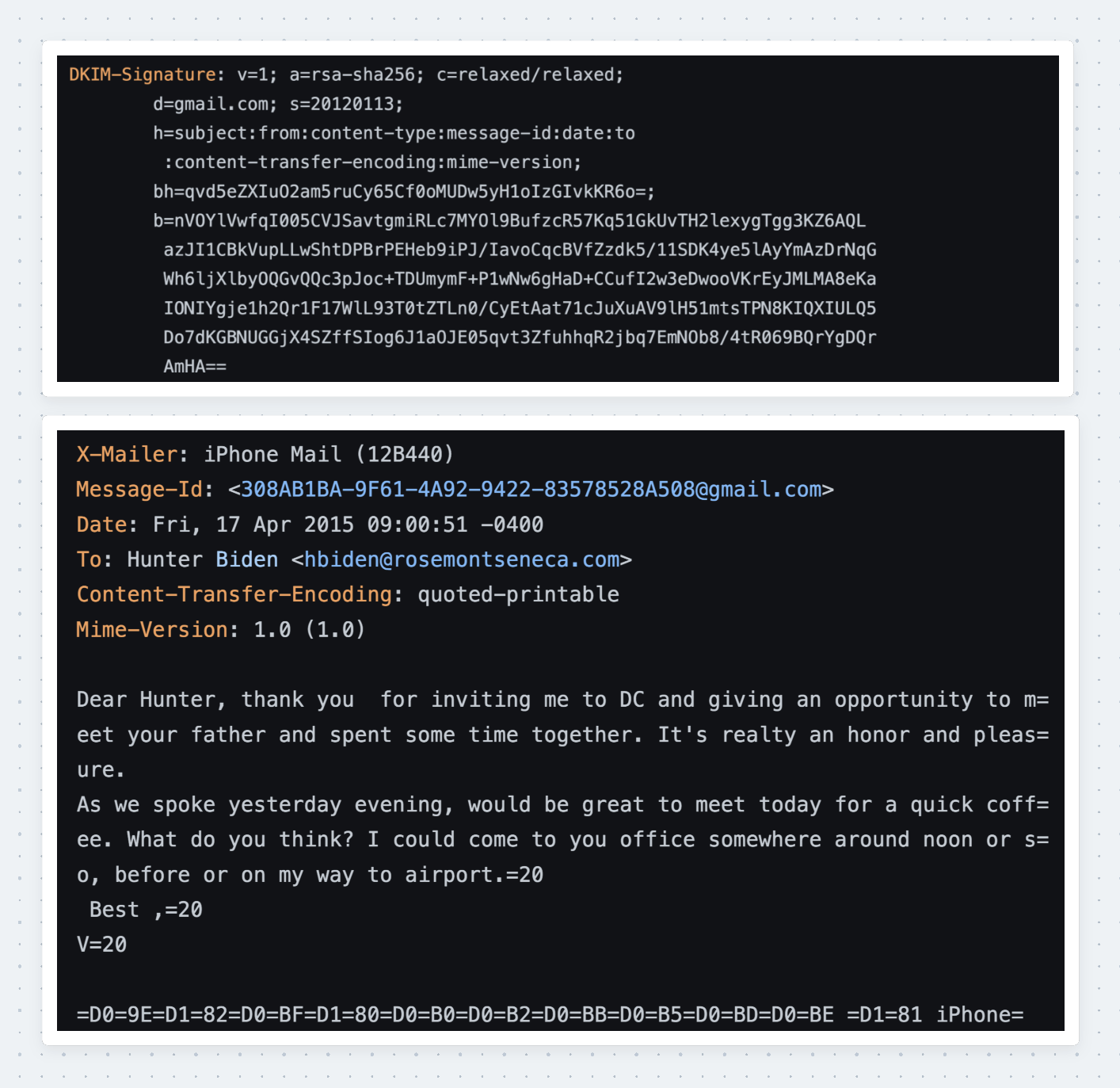

The story of how the Republicans allegedly got hold of this laptop is somewhat fantastical, winding by way of a computer repair shop in a small town in Delaware. However, fantastical stories are sometimes true, and this is exactly the type of situation in which cryptographic signatures could play a big role in establishing credibility. It doesn’t matter how wild the story is if the signatures validate. Indeed, in an effort to prove the laptop’s provenance, Republicans released a single email, with a single DKIM signature, that they claimed came from the laptop.

The DKIM signature of this email is valid, and when combined with the email body (not shown above) it does indeed prove that [email protected] sent an email to [email protected] about meeting the recipient’s father, sometime between 2012 and 2015 (the period for which the DKIM signing key was valid). However, it also raises a lot of questions, and provides a perfect example of the sliding-scale nature of deniability.

The email doesn’t prove anything more than what it says. It doesn’t prove who [email protected] is (although other evidence might). It doesn’t prove that there are thousands more like it, and it doesn’t prove that the email came from the alleged laptop.

The email is cryptographically verifiable, but without further evidence the associated story is still plausibly deniable. I suspect that if a full dump of emails and signatures had been released, a la Wikileaks and Podesta, then their combined weight could have been enough to overcome many circumstantial denials. If the Republicans were also sitting on a stack of additional, also-cryptographically-verifiable material then it’s hard to understand why they chose to release only this single, not-particularly-incendiary example. Maybe the 2020 election could have gone a different way. Cryptographic deniability is important.

You can’t deny that we’ve studied deniability in depth, so now let’s look at the other new property that Off-The-Record Messaging provides: forward secrecy.

Forward Secrecy

Alice and Bob are once again exchanging encrypted messages over a network connection. Eve is intercepting their traffic, but since their messages are encrypted she can’t read them. Nonetheless, Eve decides to store Alice and Bob’s encrypted traffic, just in case she can make use of it in the future.

A year later, Eve compromises one or both of Alice and Bob’s private keys. For many encryption protocols, Eve would be able to go back to her archives and use her newly stolen keys to decrypt all their encrypted messages that she has stored over the years. This would be a disaster. However, if Alice and Bob were using an encryption protocol with the remarkable property of forward secrecy then Eve would not be able to decrypt their stored historical traffic, even though she has access to their private keys.

Here’s Wikipedia’s definition of forward secrecy:

Forward Secrecy is a feature of specific key agreement protocols that gives assurances your session keys will not be compromised even if the private key of the server is compromised. (Wikipedia)

We’ve seen in part 1 that PGP does not give forward secrecy. Suppose that Eve stores encrypted PGP communication and later compromises Bob’s private key. Eve can now use Bob’s private key to decrypt all of the historical session keys that Alice encrypted using Bob’s public key. This means that she can use those session keys to decrypt all of Alice’s corresponding messages, long after they were sent.
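The attack can be sketched in a few lines. This is a toy model of the PGP-style "encrypt a session key to the recipient’s public key" pattern, not real PGP: the RSA key is built from well-known Mersenne primes and is insecure, and `xor_cipher` is a made-up stand-in for a proper symmetric cipher. All names are assumptions for illustration.

```python
import hashlib
import secrets

# Bob's long-lived toy RSA keypair (illustration only, not secure).
P, Q = 2**127 - 1, 2**89 - 1
N, E = P * Q, 65537                     # Bob's public key
D = pow(E, -1, (P - 1) * (Q - 1))       # Bob's private key


def xor_cipher(key: int, data: bytes) -> bytes:
    """Toy symmetric cipher: XOR the data with a SHA-256-derived keystream.

    Applying it twice with the same key returns the original data.
    """
    stream = b""
    counter = 0
    while len(stream) < len(data):
        stream += hashlib.sha256(f"{key}:{counter}".encode()).digest()
        counter += 1
    return bytes(a ^ b for a, b in zip(data, stream))


# Alice picks a fresh session key, encrypts it to Bob's public key, and uses
# it to encrypt her message.
plaintext = b"Meet me at the bank at noon"
session_key = secrets.randbelow(N)
wrapped_session_key = pow(session_key, E, N)
ciphertext = xor_cipher(session_key, plaintext)

# Eve can't read any of this today, but she stores what she sees on the wire.
eve_archive = (wrapped_session_key, ciphertext)

# A year later Eve steals Bob's private key D and opens her archive.
recovered_session_key = pow(eve_archive[0], D, N)
recovered_plaintext = xor_cipher(recovered_session_key, eve_archive[1])
```

The session key travelled over the network protected only by Bob’s long-lived key, so once that key leaks, every archived session key (and every message) leaks with it.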

To prevent this from happening, Alice and Bob need a session key exchange process that prevents Eve from working out the value of the key, even if she watches all of their key-exchange traffic, and even if she compromises their private keys. The original version of OTR achieved this remarkable property using a key-exchange protocol called Diffie-Hellman (other similar protocols would also work), which we’ll look at more later.
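As a taste of what’s to come, here is a minimal sketch of a Diffie-Hellman exchange. The prime and base below are assumptions chosen to keep the example short; real deployments use much larger, carefully chosen groups. The exchange shown is also unauthenticated, which a real protocol has to address separately.

```python
import secrets

# Public parameters that everyone, including Eve, is allowed to know.
PRIME = 2**127 - 1      # toy-sized Mersenne prime, far too small for real use
BASE = 3                # assumed base for this sketch

# Each side picks a fresh, random secret for this session only.
alice_secret = secrets.randbelow(PRIME - 2) + 1
bob_secret = secrets.randbelow(PRIME - 2) + 1

# These two values are sent over the network, where Eve can record them.
alice_public = pow(BASE, alice_secret, PRIME)
bob_public = pow(BASE, bob_secret, PRIME)

# Each side combines its own secret with the other side's public value.
alice_session_key = pow(bob_public, alice_secret, PRIME)
bob_session_key = pow(alice_public, bob_secret, PRIME)
keys_match = alice_session_key == bob_session_key

# Once the session key is derived, the per-session secrets are discarded.
# (In Python, `del` only drops the reference; it does not securely wipe memory.)
del alice_secret, bob_secret
```

Neither the session key nor the per-session secrets ever cross the network, and no long-lived private key is involved in deriving the session key. Eve’s archive contains only `alice_public` and `bob_public`, and stealing Alice or Bob’s long-lived keys later gives her nothing that helps turn those into the session key.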

To complete their guarantee of forward secrecy, Alice and Bob need to “forget” each session key as soon as they are done with it, wiping it from their RAM, disk, and anywhere else from which an attacker who compromises their computers might be able to recover it. If Alice and Bob agree their session keys using a carefully designed key-exchange protocol like Diffie-Hellman and fully forget them once they’re no longer needed, then there is no way for anyone, not even the attacker and not even Alice and Bob themselves, to work out what the values of those session keys were. There is therefore no way, in any circumstances, for anyone to decrypt traffic or encrypted message dumps that were encrypted using one of these forgotten session keys. Forward secrecy, and with it one of the primary goals of OTR, is achieved.

Forward secrecy is often called “perfect forward secrecy”, including by many very eminent cryptographers. Other, similarly eminent cryptographers object to the word “perfect” because (my paraphrasing) it promises too much. Nothing is perfect, and even when a forward secrecy protocol is correctly implemented, its guarantees are not perfect until the sender and receiver have finished forgetting a session key. Between the times at which a message is encrypted and the key is forgotten lies a window of opportunity for a sufficiently advanced attacker to steal the session key from the participants’ RAM or anywhere else they might have stored it. In practice the guarantees of forward secrecy are still strong and remarkable. But when discussing Off-The-Record Messaging, a protocol whose entire reason for existence is to be robust to failures, these are exactly the kinds of edge-cases we should contemplate. We’ll talk about this more in later sections.

It’s clear why confidentiality and authenticity are desirable properties for a secure messaging protocol. Confidentiality prevents attackers from reading Alice and Bob’s messages, while authenticity allows Alice and Bob to be confident that they are each talking to the right person and that their messages have not been tampered with.

We’ve also seen why deniability and forward secrecy are important. Deniability allows participants to credibly claim not to have sent messages in the event of a compromise. This replicates the privacy properties of real-life conversation and makes the fallout of a data theft less harmful. Forward secrecy, the other property that we discussed, prevents attackers from reading historical encrypted messages, even if they compromise the long-lived private keys used at the base of the encryption process.

The goal of OTR is to achieve all of these properties simultaneously, and now we’re ready to see how it does this. In part 3 of this series we’ll look at how an OTR exchange works, then in part 4 we’ll delve into its design decisions.

Index

This is part 2 of a 4 part series about Off-The-Record Messaging (OTR), a cryptographic messaging protocol that protects its users’ communications even if they get hacked. In part 1 we looked at problems with common encrypted messaging protocols, such as PGP. We also considered two desirable properties for messaging protocols to have: confidentiality and authenticity. In this post (part 2) we’re going to look at two more important properties - deniability and forward secrecy - and see that many protocols fail to achieve them. We’ll then be ready to see how OTR works and how it provides each of confidentiality, authenticity, deniability and forward secrecy in parts 3 and 4.

Index

- Part 1: the problem with PGP

- Part 2: deniability and forward secrecy

- Part 3: how OTR works

- Part 4: OTR’s key insights

Deniability

Deniability is the ability for a person to credibly deny that they knew or did something. It’s one of the main focusses of OTR, but one of the main weaknesses of PGP.

Statements from in-person conversations are usually easily deniable. If you claim that I told you that I robbed a bank then I can credibly retort that I didn’t and you can’t prove otherwise.

Email conversations can be deniable too (although see below for a look into why in practice they often aren’t). Suppose that you forward to a journalist the text of an email in which I appear to describe my plans to rob a hundred banks. I can credibly claim that you edited the email or forged it from scratch, and you still can’t prove that I’m lying. The public or the police or the judge might believe your claims over mine, but nothing is mathematically provable and we’re down in the murky world of human judgement.

By contrast, we’ve seen that PGP-signed messages are not deniable. If Alice signs a message and sends it to Bob then Bob can use the PGP signature to validate that the message is authentic. However, all this validation requires is Alice’s public key, which is freely available. This means that anyone else who comes into possession of the message can also validate the signature and prove that Alice sent the message in exactly the same way that Bob did. Alice is therefore permanently on the record as having sent these messages. If Eve hacks Bob’s messages, or if Alice and Bob fall out and Bob forwards their past communication to her enemies, Alice cannot plausibly deny having sent the messages in the same way as she could if she had never signed them. If you forward to a journalist a cryptographically signed email in which I describe my plans to rob a thousand banks, I will be in a pickle.

On the face of it, deniability is in tension with authenticity. Authenticity requires that Bob can prove to himself that a message was sent by Alice. By contrast, deniability requires that Alice can deny having ever sent that same message. As we’ll see, one of the most interesting innovations of OTR is how it achieves these seemingly contradictory goals simultaneously.

Technically anything can be denied, even cryptographic signatures. I can always claim that someone stole my computer and guessed my password, or infected my computer with malware, or stole my private keys while I was letting them use my computer to look up the football scores. These claims are not impossible, but they are unlikely and tricky to argue. Deniability is a sliding scale of plausibility, and so OTR goes to great lengths to make denials more believable and therefore more plausible. “A dog ate my homework” is a much more credible excuse if you ostentatiously purchased twenty ferocious dogs the day before.

It’s perfectly reasonable to believe that a deniable message is still authentic. We all assess and believe hundreds of claims every day using vague balances of probability, without mathematical proof. Screenshots of a WhatsApp conversation might reasonably be enough to convince the authorities of my plans to rob ten thousand banks, even without cryptographic signatures.

But as we’ll now see, if signatures are available then that does make everything easier.

DKIM, the Podesta Emails, and the Hunter Biden laptop

For message senders, deniability is almost always a desirable property. There’s rarely any advantage to having everything you say or write go into an indelible record that might come back to haunt you. This is not the same thing as saying that deniability is an objectively good thing that makes the world strictly better. Just take the intertwined stories of the DKIM email verification protocol; US Democratic Party operative John Podesta; and the laptop of Hunter Biden, son of President Joe Biden.

In order to understand the politics of these stories, we first need to discuss the protocols. In the old days, when an email provider received an email claiming to be from [email protected], there was no way for the provider to verify that the email really was sent by [email protected]. The provider therefore typically crossed their fingers, hoped that the email was legitimate, and accepted it. Spammers abused this trust to bombard email inboxes with forged emails. The DKIM protocol was created in 2004 to allow email providers to verify that the emails they receive are legitimate, and the protocol is still used to this day.

DKIM uses many of the same techniques as PGP. In order to use DKIM (which stands for Domain Keys Identified Mail), email providers generate a keypair and publish the public key to the world (via a DNS TXT record, although the exact mechanism is not important to us here). When a user sends an email, their email provider generates a signature for the message using the provider’s private signing key. The provider inserts this signature into the outgoing message as an email header.

When the receiver’s email provider receives the message, it looks up the sending provider’s public key and uses it to check the DKIM signature against the email’s contents, in much the same way as a recipient would check a PGP message signature against a PGP message’s contents. If the signature is valid, the receiving provider accepts the message. If it isn’t, the receiving provider assumes that the message is forged and rejects it. Since spammers don’t have access to mail providers’ signing keys, they can’t generate valid signatures. This means that they can’t generate fake emails that pass DKIM verification, making DKIM very good at detecting and preventing email forgery.

But as we’ve seen with PGP, where there’s a cryptographic signature, there might also be a problem with deniability. As well as providing authentication, a DKIM signature provides permanent, undeniable proof that the signed message is authentic. DKIM signatures are part of the contents of an email, so they are saved in the recipient’s inbox. If a hacker steals all the emails from an inbox, they can validate the DKIM signatures themselves using the sending provider’s public DKIM key, in the same way that the recipient’s email provider did when it received the message. The attacker already knows that the emails are legitimate, since they stole them with their own two hands. However, DKIM signatures allow them to prove this fact to a sceptical third-party as well, obliterating the emails’ deniability. Email providers change, or rotate, their DKIM keys regularly, which means that the public key currently in their DNS record may be different to the key used to sign the message. Fortunately for the attacker, historical DKIM public keys for many large mail providers can easily be found on the internet.

Matthew Green, a professor at Johns Hopkins University, points out that making emails non-repudiatable in this way is not one of the goals of the DKIM protocol. Rather, it’s an odd side-effect that wasn’t contemplated when DKIM was originally designed and deployed. Green argues that DKIM signatures make email hacking a much more lucrative pursuit. It’s hard for a journalist or a foreign government to trust a stolen dump of unsigned emails sent to them by an associate of an associate of an associate, since any of the people in this long chain of associates could have faked or embellished the emails’ contents. However, if the emails contain cryptographic DKIM signatures generated by trustworthy third parties (such as a reputable email provider), then the emails are provably real, no matter how questionable the character who gave them to you. Cryptographic signatures don’t decay with social distance or sordidness. Data thieves are able to piggy-back off of Gmail’s (or any other DKIM signer’s) credibility, making stolen, signed emails a verifiable and therefore more valuable commodity.

This causes problems in the real world. In March 2016, Wikileaks published a dump of emails hacked from the Gmail account of John Podesta, a US Democratic Party operative. Alongside each email Wikileaks published the corresponding DKIM signature, generated by Gmail or whichever provider sent the email. This allowed independent verification of the messages, which prevented Podesta from claiming that the emails were nonsense fabricated by liars.

You may think that the Podesta hack was, in itself, a good thing for democracy, or a terrible thing for a private citizen. You may believe that the long-term verifiability of DKIM signatures is a societal virtue that increases transparency, or a blunder that incentivizes email hacking. But whatever your opinions, you’d have to agree that John Podesta definitely wishes that his emails didn’t have long-lived proofs of their provenance, and that most individual email users would like their own messages to be sent deniably and off-the-record.

Matthew Green has a counter-intuitive but elegant solution to this problem. Google already regularly rotate their DKIM keys. They do this as a best-practice precaution, in case the keys have been compromised without Google realizing. Green proposes that once Google (and other mail providers) have rotated a DKIM keypair and taken it out of service, they should publicly publish the keypair’s private key.

This sounds odd. Publishing an DKIM private key means that anyone can use it to spoof a valid-looking DKIM signature for an email. If the keypair were still in use, this would make Google’s DKIM signatures useless. However, since the keypair has been retired, revealing the private key does not jeopardize the effectiveness of DKIM in any way. The purpose of DKIM is to allow email recipients to verify the authenticity of a message at the time they receive that message. Once the recipient has verified and accepted the message, they don’t need to re-verify it in the future. The signature has no further use unless someone - such as an attacker - wants to later prove the provenance of an email.

Since DKIM only requires signatures to be valid and verifiable when the email is received, it doesn’t matter if an attacker can spoof a signature for an old email. In fact it’s desirable, because it renders worthless all DKIM signatures that were legitimately generated by the email provider using the now-public private key. Suppose that an attacker has stolen an old email dump containing many emails sent by Gmail, complete with DKIM signatures generated using Gmail’s now-public private key. Previously, only Google could have generated these signatures, and so the signatures proved that the emails were genuine. However, since the old private key is now public, anyone can use it to generate valid signatures themselves. All a forger has to do is take the now-public private DKIM key of the email provider, plus the contents of the email that they want to sign. They use the key to sign the email, in much the same way as they would sign a PGP message. Finally, they add the resulting signature as an email header, just as the email provider would.

This means that unless an attacker with a stolen batch of signed emails can prove that their signatures were generated while the key was still private (which in general they won’t be able to), the signatures don’t prove anything about anything to a sceptical third party. This applies even if the attacker really did steal the emails and is engaging in only a single layer of simple malfeasance.

But how much difference does any of this really make? Wouldn’t everyone have believed the Podesta Emails anyway, without the signatures? Possibly. But consider a more recent example in which I think that DKIM signatures could have changed the course of history, had they been available.

During the 2020 US election campaign, Republican operatives claimed to have gained access to a laptop belonging to Hunter Biden, son of the then-Democratic candidate and now-President Joe Biden. The Republicans claimed that this laptop contained gobs of explosive emails and information about the Bidens that would shock the public.

The story of how the Republicans allegedly got hold of this laptop is somewhat fantastical, winding by way of a computer repair shop in a small town in Delaware. However, fantastical stories are sometimes true, and this is exactly the type of situation in which cryptographic signatures could play a big role in establishing credibility. It doesn’t matter how wild the story is if the signatures validate. Indeed, in an effort to prove the laptop’s provenance, Republicans released a single email, with a single DKIM signature, that they claimed came from the laptop.

The DKIM signature of this email is valid, and when combined with the email body (not shown above) it does indeed prove that the sender’s address sent an email to the recipient’s address about meeting the recipient’s father, sometime between 2012 and 2015 (the period for which the DKIM signing key was valid). However, it also raises a lot of questions, and provides a perfect example of the sliding-scale nature of deniability.

The email doesn’t prove anything more than what it says. It doesn’t prove who the sender is (although other evidence might). It doesn’t prove that there are thousands more like it, and it doesn’t prove that the email came from the alleged laptop.

The email is cryptographically verifiable, but without further evidence the associated story is still plausibly deniable. I suspect that if a full dump of emails and signatures had been released, à la WikiLeaks and Podesta, then their combined weight could have been enough to overcome many circumstantial denials. If the Republicans were also sitting on a stack of additional, also-cryptographically-verifiable material then it’s hard to understand why they chose to release only this single, not-particularly-incendiary example. Maybe the 2020 election could have gone a different way. Cryptographic deniability is important.

You can’t deny that we’ve studied deniability in depth, so now let’s look at the other new property that Off-The-Record Messaging provides: forward secrecy.

Forward Secrecy

Alice and Bob are once again exchanging encrypted messages over a network connection. Eve is intercepting their traffic, but since their messages are encrypted she can’t read them. Nonetheless, Eve decides to store Alice and Bob’s encrypted traffic, just in case she can make use of it in the future.

A year later, Eve compromises one or both of Alice and Bob’s private keys. For many encryption protocols, Eve would be able to go back to her archives and use her newly stolen keys to decrypt all their encrypted messages that she has stored over the years. This would be a disaster. However, if Alice and Bob were using an encryption protocol with the remarkable property of forward secrecy then Eve would not be able to decrypt their stored historical traffic, even though she has access to their private keys.

Here’s Wikipedia’s definition of forward secrecy:

Forward Secrecy is a feature of specific key agreement protocols that gives assurances your session keys will not be compromised even if the private key of the server is compromised. (Wikipedia)

We’ve seen in part 1 that PGP does not give forward secrecy. Suppose that Eve stores encrypted PGP communication and later compromises Bob’s private key. Eve can now use Bob’s private key to decrypt all of the historical session keys that Alice encrypted using Bob’s public key. This means that she can use those session keys to decrypt all of Alice’s corresponding messages, long after they were sent.
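The attack can be sketched in a few lines of Python. This is a toy model of the PGP-style pattern, not real PGP: the session key is wrapped with textbook RSA (no padding), the keypair is built from two well-known Mersenne primes purely for convenience, and the message is encrypted with an improvised SHA-256 keystream rather than a real cipher. The names `keystream_xor`, `BOB_PRIVATE` and `archive` are hypothetical.

```python
import hashlib
import secrets

# Toy RSA keypair standing in for Bob's long-lived PGP key (illustration only).
P, Q = 2**521 - 1, 2**607 - 1
N, E = P * Q, 65537                        # Bob's public key
BOB_PRIVATE = pow(E, -1, (P - 1) * (Q - 1))  # Bob's long-lived private key


def keystream_xor(key: bytes, data: bytes) -> bytes:
    """Toy stream cipher: XOR data with SHA-256(key || offset) blocks.
    Applying it twice with the same key returns the original data."""
    out = bytearray()
    for i in range(0, len(data), 32):
        block = hashlib.sha256(key + i.to_bytes(8, "big")).digest()
        out.extend(b ^ k for b, k in zip(data[i:i + 32], block))
    return bytes(out)


# Alice picks a fresh session key, wraps it with Bob's PUBLIC key, and
# encrypts her message with the session key.
session_key = secrets.token_bytes(32)
wrapped_key = pow(int.from_bytes(session_key, "big"), E, N)
ciphertext = keystream_xor(session_key, b"Meet at the usual place at noon.")

# Eve cannot read any of this today, but she stores what she intercepts.
archive = (wrapped_key, ciphertext)

# A year later Eve steals BOB_PRIVATE and returns to her archive.
stored_key, stored_ciphertext = archive
recovered_key = pow(stored_key, BOB_PRIVATE, N).to_bytes(32, "big")
print(keystream_xor(recovered_key, stored_ciphertext))
```

The important detail is that everything Eve needs travelled over the wire. The session key was protected only by Bob's long-lived key, so compromising that one key unlocks every message ever sent to him.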

To prevent this from happening, Alice and Bob need a session key exchange process that prevents Eve from working out the value of the key, even if she watches all of their key-exchange traffic, and even if she compromises their private keys. The original version of OTR achieved this remarkable property using a key-exchange protocol called Diffie-Hellman (other similar protocols would also work), which we’ll look at more later.

To complete their guarantee of forward secrecy, Alice and Bob need to “forget” each session key as soon as they are done with it, wiping it from their RAM, disk, and anywhere else from which an attacker who compromises their computers might be able to recover it. If Alice and Bob agree their session keys using a carefully designed key-exchange protocol like Diffie-Hellman and fully forget them once they’re no longer needed, then there is no way for anyone to work out what the values of those session keys were: not the attacker, and not even Alice and Bob themselves. There is therefore no way, in any circumstances, for anyone to decrypt traffic or encrypted message dumps that were encrypted using one of these forgotten session keys. Forward secrecy, and with it one of the primary goals of OTR, is achieved.
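As a rough illustration of the idea (not of OTR's actual exchange, which we'll cover later), here is a minimal Diffie-Hellman sketch in Python. The group parameters are assumptions chosen for brevity: a Mersenne prime modulus and base 3, which are not vetted parameters and should not be used for anything real. The helpers `ephemeral_keypair` and `session_key` are hypothetical names. Note that Python's `del` only drops a reference and does not securely wipe memory, so the "forgetting" step here is symbolic.

```python
import hashlib
import secrets

# Toy group parameters, assumed for illustration only.
P = 2**521 - 1  # a Mersenne prime used as the modulus
G = 3           # base for the exponentiation


def ephemeral_keypair() -> tuple[int, int]:
    """Generate a throwaway private exponent and its public value."""
    private = secrets.randbelow(P - 3) + 2  # uniform in [2, P - 2]
    public = pow(G, private, P)
    return private, public


def session_key(my_private: int, their_public: int) -> bytes:
    """Derive a session key from my private and their public value."""
    shared = pow(their_public, my_private, P)
    return hashlib.sha256(shared.to_bytes(66, "big")).digest()


# Each side makes a fresh keypair for this session only.
alice_private, alice_public = ephemeral_keypair()
bob_private, bob_public = ephemeral_keypair()

# Only alice_public and bob_public cross the network, so these two
# values are all that Eve gets to store.
alice_key = session_key(alice_private, bob_public)
bob_key = session_key(bob_private, alice_public)

keys_match = alice_key == bob_key
print(keys_match)  # True

# "Forget" the ephemeral secrets and the session key once finished.
del alice_private, bob_private, alice_key, bob_key
```

Unlike the PGP pattern, the session key is never sent over the network, not even in encrypted form. Once the ephemeral private values and the derived key are gone, Eve's archive contains only the two public values, and recovering the key from those is believed to be computationally infeasible for properly chosen parameters.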

Forward secrecy is often called “perfect forward secrecy”, including by many very eminent cryptographers. Other, similarly eminent cryptographers object to the word “perfect” because (my paraphrasing) it promises too much. Nothing is perfect, and even when a forward secrecy protocol is correctly implemented, its guarantees are not perfect until the sender and receiver have finished forgetting a session key. Between the times at which a message is encrypted and the key is forgotten lies a window of opportunity for a sufficiently advanced attacker to steal the session key from the participants’ RAM or anywhere else they might have stored it. In practice the guarantees of forward secrecy are still strong and remarkable. But when discussing Off-The-Record Messaging, a protocol whose entire reason for existence is to be robust to failures, these are exactly the kinds of edge-cases we should contemplate. We’ll talk about this more in later sections.

It’s clear why confidentiality and authenticity are desirable properties for a secure messaging protocol. Confidentiality prevents attackers from reading Alice and Bob’s messages, while authenticity allows Alice and Bob to be confident that they are each talking to the right person and that their messages have not been tampered with.

We’ve also seen why deniability and forward secrecy are important. Deniability allows participants to credibly claim not to have sent messages in the event of a compromise. This replicates the privacy properties of real-life conversation and makes the fallout of a data theft less harmful. Forward secrecy, the other property that we discussed, prevents attackers from reading historical encrypted messages, even if they compromise the long-lived private keys used at the base of the encryption process.

The goal of OTR is to achieve all of these properties simultaneously, and now we’re ready to see how it does this. In part 3 of this series we’ll look at how an OTR exchange works, then in part 4 we’ll delve into its design decisions.