Off-The-Record Messaging part 4: key insights

15 Feb 2022

This is the final part in a 4 part series about Off-The-Record Messaging (OTR), a cryptographic messaging protocol that protects its users’ communications even if they get hacked. In parts 1 and 2 we looked at problems with common encrypted messaging protocols, such as PGP. We considered four desirable properties of encrypted messaging protocols: confidentiality, authenticity, deniability, and forward secrecy. In part 3 we saw how OTR works and how it provides each of these properties, and in this final part we’ll delve into some of the protocol’s key insights.

Index

- Part 1: the problem with PGP

- Part 2: deniability and forward secrecy

- Part 3: how OTR works

- Part 4: OTR’s key insights

Why does OTR use symmetric signatures instead of asymmetric ones?

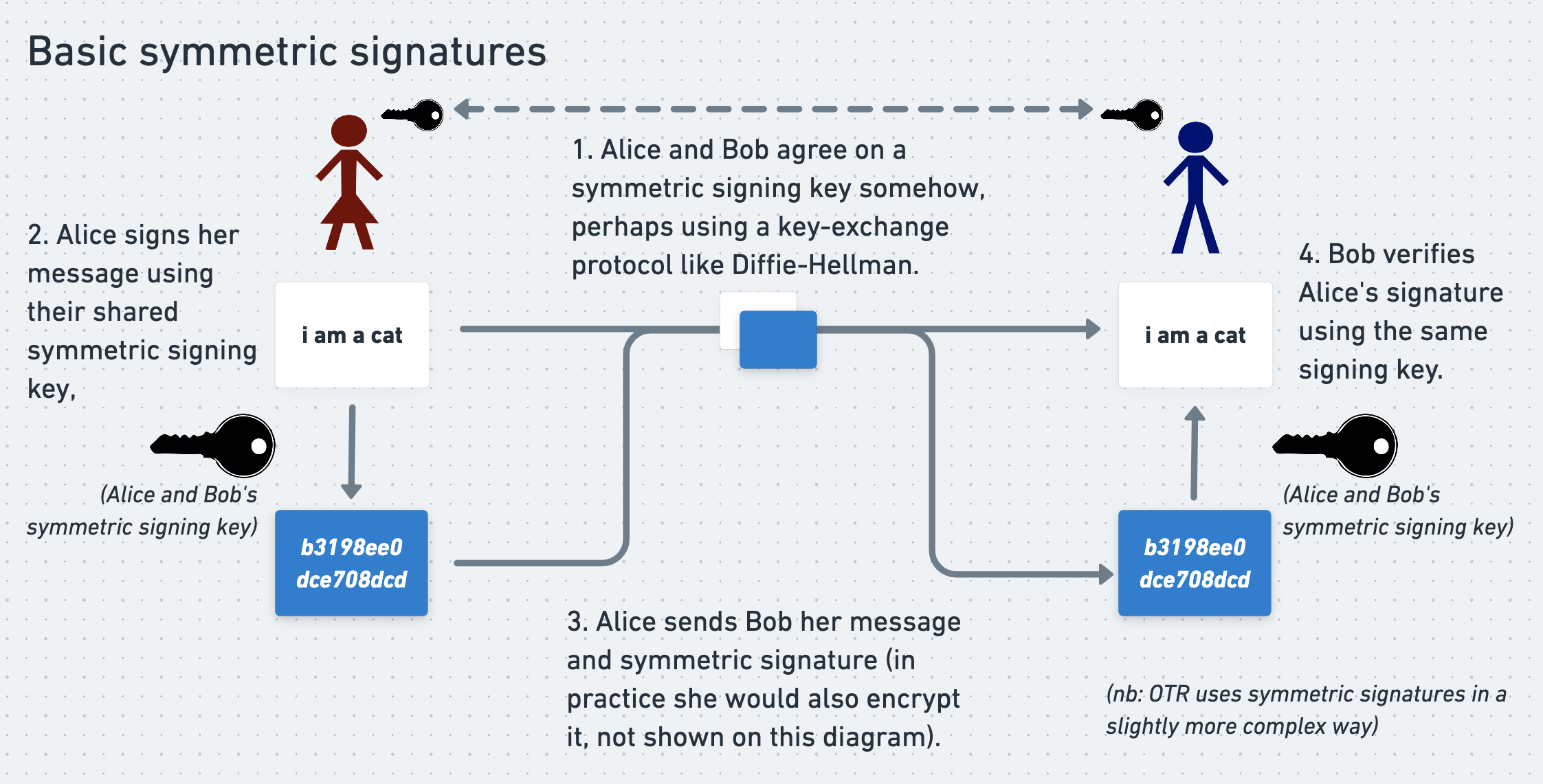

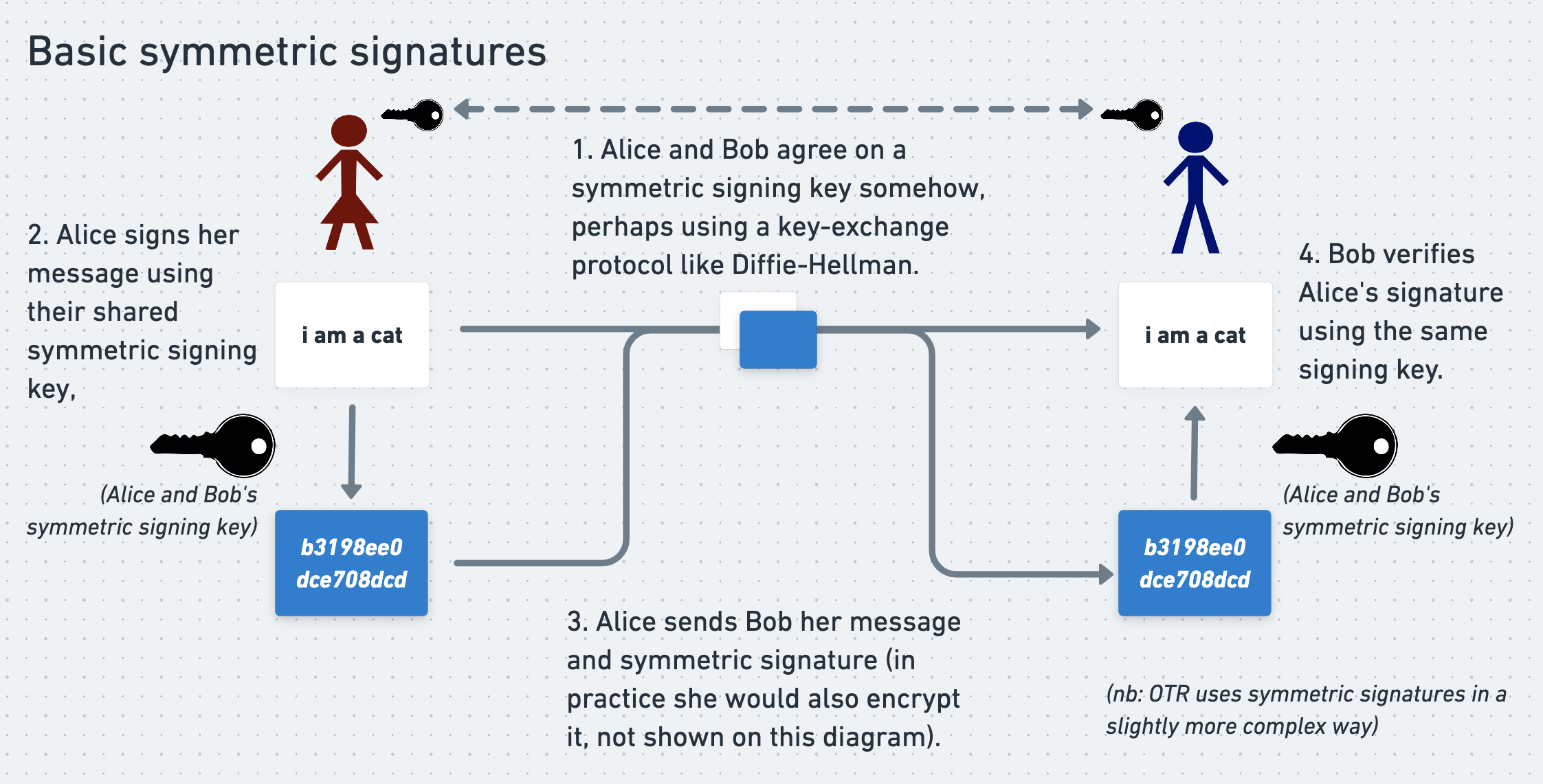

OTR goes to a lot of trouble to use symmetric, HMAC signatures to authenticate its messages, instead of asymmetric ones. However, asymmetric signatures generated using public/private keypairs would also do a perfectly good job of authentication. Why does OTR bother with symmetric ones?

The answer is that symmetric signatures help preserve deniability. They help OTR avoid the Podesta problem, in which Wikileaks used (asymmetric) DKIM signatures to prove that the stolen dump of John Podesta’s emails was legitimate.

Here’s how symmetric signatures help with deniability. Remember, since HMAC signatures are symmetric, they are both created and verified using a single shared secret key that is known to both the signer and the verifier. The signer creates the signature by passing their message and the shared secret key into (in OTR’s case) the HMAC signing algorithm. When a verifier needs to verify this signature, they do so by performing the same operation as the signer - using the same key - and making sure that their result matches the signature they were sent.
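To make the "same operation, same key" idea concrete, here is a minimal sketch using Python's standard `hmac` module. The key, the message, and the choice of SHA-256 as the underlying hash are illustrative assumptions, not details taken from the OTR specification.

```python
import hashlib
import hmac


def sign(key: bytes, message: bytes) -> bytes:
    """Create an HMAC tag for `message` under the shared secret `key`."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(key: bytes, message: bytes, tag: bytes) -> bool:
    """Verify by recomputing the tag with the same key and comparing."""
    return hmac.compare_digest(sign(key, message), tag)


# Hypothetical values, for illustration only.
shared_key = b"hypothetical shared secret"
message = b"Meet at noon"

tag = sign(shared_key, message)
is_valid = verify(shared_key, message, tag)
is_valid_wrong_key = verify(b"some other key", message, tag)
```

Notice that `verify` is nothing more than `sign` plus a comparison. There is no separate "verification key", which is exactly the property the rest of this section relies on.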

Next, suppose that an attacker completely compromises Alice’s computer. They steal a pile of messages that she has exchanged with Bob over OTR. The attacker wants to take the messages to Wikileaks and for Wikileaks to publish them. They know that Wikileaks will want cryptographic proof that the messages are real, so they also steal the messages’ HMAC signatures. Since verifying an HMAC signature requires the shared signing key, the attacker uses their complete access to Alice’s computer to steal these keys too, before Alice has a chance to wipe them from her RAM.

The attacker goes to Wikileaks and attempts to use the messages’ HMAC signatures to prove that their stolen message dump is real. For each message they pass the message’s contents and the HMAC secret into the HMAC algorithm. They demonstrate to Wikileaks that this signature matches the one in the stolen dump. For an asymmetric signature, this would be strong proof of the messages’ legitimacy.

However, for a symmetric signature it doesn’t prove anything! Since HMAC signatures are symmetric, the same key is used to both generate and verify them. Since the attacker necessarily needs to know this key in order to verify the signatures, the attacker could trivially have used the same key to forge the signatures themselves. They have no way to prove to Wikileaks that they didn’t, even if they did in fact steal them fair and square. Note that the same logic could apply for asymmetric signatures if Wikileaks suspects that the attacker might have stolen Alice’s private key and forged the signatures themselves.

This defence also works if Alice or Bob turns against the other and suddenly wants to expose their OTR communication to the world. They can publish all of the traffic, keys, signatures, and messages that they exchanged, but they can’t prove that they didn’t forge the signatures themselves. You might mostly trust your friends, but it’s still good to use safe cryptography just in case.

The key difference between the symmetric and asymmetric signature scenarios is that asymmetric signatures are generated and verified using different keys. An attacker can therefore verify stolen signatures without having been able to generate them themselves. When attempting to use symmetric signatures to verify stolen messages, the attacker has too much power for their own good.
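Here is a rough sketch of the attacker's predicament, again using Python's `hmac` module with SHA-256 as an assumed hash. All of the keys and messages are made up. The point is that the check the attacker performs on a genuine message is indistinguishable from the check they could perform on a message they invented five minutes ago.

```python
import hashlib
import hmac


def hmac_tag(key: bytes, message: bytes) -> bytes:
    """Compute an HMAC tag; used for both signing and verifying."""
    return hmac.new(key, message, hashlib.sha256).digest()


# Hypothetical key that the attacker stole from Alice's machine.
stolen_key = b"key lifted from Alice's RAM"

# A genuine message and the tag that Alice really produced for it.
real_message = b"I'll bring the documents"
real_tag = hmac_tag(stolen_key, real_message)

# The attacker "proves" the real message by recomputing the tag.
real_check = hmac.compare_digest(hmac_tag(stolen_key, real_message), real_tag)

# The same key lets the attacker sign anything they like.
forged_message = b"I never said any of this"
forged_tag = hmac_tag(stolen_key, forged_message)
forged_check = hmac.compare_digest(hmac_tag(stolen_key, forged_message), forged_tag)
```

Both `real_check` and `forged_check` come out `True`, so a sceptical third party learns nothing from watching the attacker run either one.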

Why is it necessary and acceptable for OTR to sign its intermediate Diffie-Hellman values using asymmetric signatures?

We’ve been rattling on about how ingenious and important it is that OTR signs its messages using a symmetric algorithm and a shared secret key. This allows OTR to provide authentication while still preserving deniability. Alice and Bob can be confident that they are talking with each other directly, while also allowing them to deny that they ever spoke in the event that their communication is compromised.

However, the only reason that they are able to trust these symmetric signatures to provide authentication is that they trust that they agreed on the signing key that produced them with the right person. If an attacker were able to manipulate their key exchange process then they might be able to trick Alice into unwittingly agreeing a shared secret with them instead of Bob. The attacker would then be able to talk to Alice while impersonating Bob.

We’ve been talking at great length about the deniability perils of asymmetric cryptography. But at some point, if you want to be sure that you’re talking to the right person on the internet, you’re probably going to have to use asymmetric signatures and public/private keypairs. In OTR Alice and Bob ensure that they are agreeing a key with the right person by using carefully-placed asymmetric signatures. How do these particular asymmetric signatures help, and why are they safe?

As we’ve seen, Alice and Bob agree on their shared symmetric encryption key using a Diffie-Hellman key exchange process. They later take the hash of this symmetric encryption key, and use this as their symmetric signing key. Importantly, they use asymmetric signatures to prove to the other person that they are agreeing these keys with the right person.

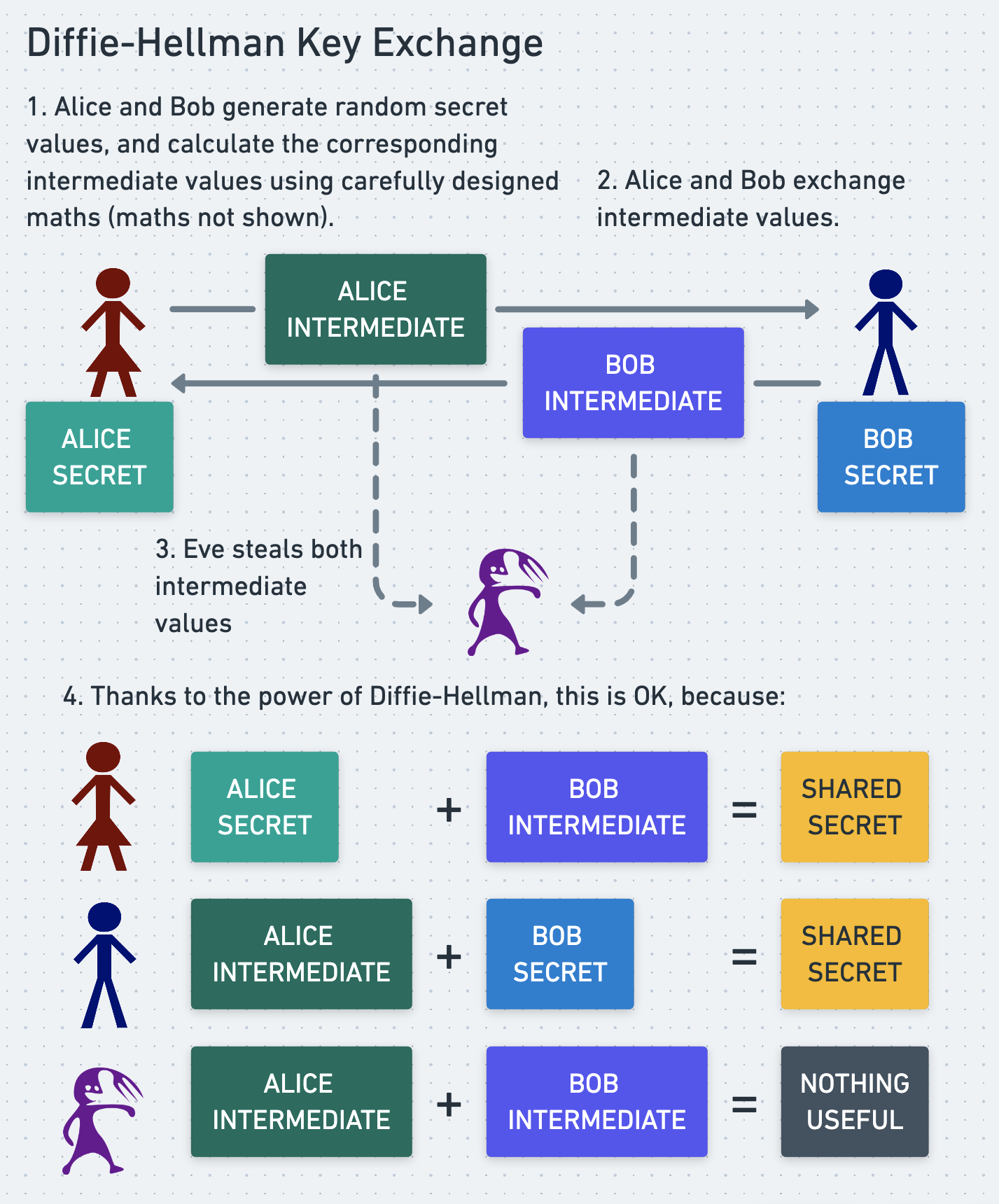

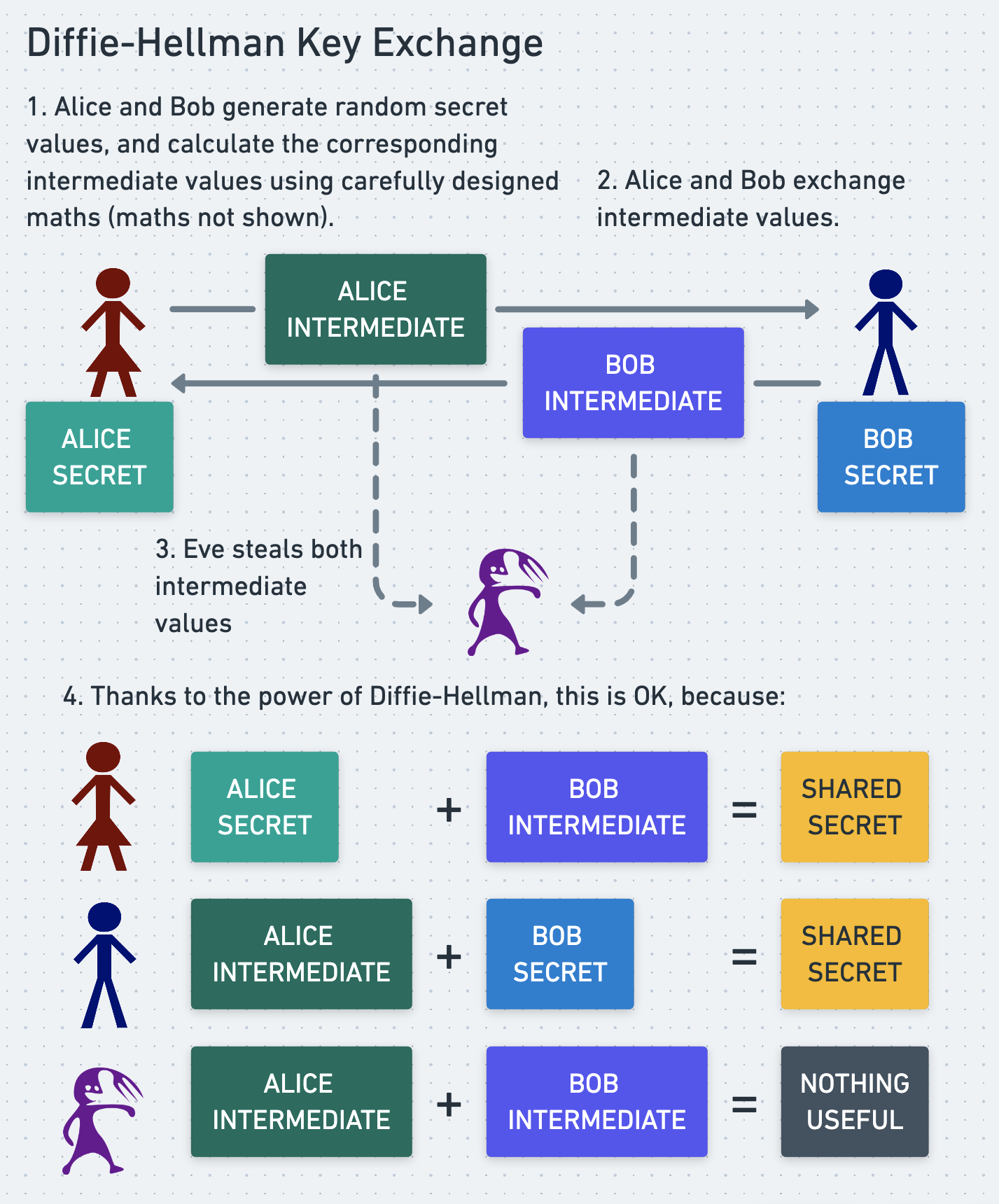

Recall that in a Diffie-Hellman key exchange, the participants each start by generating a long random number. They don’t send each other these random numbers, but instead exchange carefully selected “intermediate values”, derived from their original numbers. Thanks to some spectacular mathematics, by combining their own original number with the other party’s intermediate value, both participants can generate the same shared secret key. Just as remarkably, even if an attacker intercepts their traffic and reads both of their intermediate values, the attacker will be unable to construct the shared secret key, since they don’t know either of the original random numbers.
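The "spectacular mathematics" can be sketched in a few lines of Python. This is a toy: the modulus and base below are assumptions chosen to keep the example short, and are far too small for real-world use. Real implementations use much larger, carefully chosen parameters.

```python
import secrets

# Toy public parameters (assumptions for illustration only).
P = 2**127 - 1  # a Mersenne prime; much too small for real use
G = 3           # public base

# Each participant picks a random secret and never sends it.
alice_secret = secrets.randbelow(P - 2) + 1
bob_secret = secrets.randbelow(P - 2) + 1

# The "intermediate values" that are actually sent over the wire.
alice_intermediate = pow(G, alice_secret, P)
bob_intermediate = pow(G, bob_secret, P)

# Each side combines their own secret with the other's intermediate value.
alice_shared = pow(bob_intermediate, alice_secret, P)
bob_shared = pow(alice_intermediate, bob_secret, P)
```

Both sides arrive at the same number because `(G**a)**b` and `(G**b)**a` are equal modulo `P`. An eavesdropper sees only `alice_intermediate` and `bob_intermediate`, and with properly sized parameters has no practical way to recover either secret from them.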

In order to give each other confidence that they are performing a Diffie-Hellman key exchange with the right person, Alice and Bob each sign their intermediate values using their private keys before sending them. When Alice and Bob receive the other person’s intermediate value they can verify the accompanying signature using the other person’s public key. This gives them confidence that the intermediate values were indeed generated by the right person, and therefore that they are performing a key exchange with the right person too. This means that they can trust that the shared secret derived from the key exchange is known only to them and the other person, and so any messages encrypted or signed using it and a symmetric algorithm (like HMAC) are real and unmolested.
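As a loose illustration of signing an intermediate value, here is a sketch that uses toy textbook RSA as a stand-in for a real asymmetric signature scheme. This is not the signature algorithm that OTR uses, the key is built from small well-known primes, and there is no padding, so please treat it purely as a picture of "sign with the private key, verify with the public key". Every name and value here is hypothetical.

```python
import hashlib

# Toy textbook-RSA keypair for "Bob", built from two Mersenne primes.
# Illustration only: no padding, tiny key, not OTR's real scheme.
P, Q = 2**61 - 1, 2**89 - 1
N = P * Q                          # public modulus
E = 65537                          # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent (needs Python 3.8+)


def digest_int(value: int) -> int:
    """Hash an intermediate value down to an integer smaller than N."""
    digest = hashlib.sha256(str(value).encode()).digest()
    return int.from_bytes(digest, "big") % N


def sign(value: int, private_exponent: int) -> int:
    """Sign the hash of `value` with the private exponent."""
    return pow(digest_int(value), private_exponent, N)


def verify(value: int, signature: int, public_exponent: int) -> bool:
    """Check a signature using only the public exponent and modulus."""
    return pow(signature, public_exponent, N) == digest_int(value)


bob_intermediate = 123456789  # hypothetical Diffie-Hellman intermediate value
bob_signature = sign(bob_intermediate, D)

# Alice checks the value she received against Bob's public key.
accepted = verify(bob_intermediate, bob_signature, E)

# An attacker who swaps in their own intermediate value can't reuse the signature.
swapped = verify(987654321, bob_signature, E)
```

Unlike the HMAC case, verification here needs only `E` and `N`, so Alice can check Bob's signature without being able to produce it herself.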

Signing a Diffie-Hellman intermediate value with an asymmetric algorithm and a private key provides good authentication and trust in the resulting key, and doesn’t impair participants’ deniability. If an attacker intercepts the signed messages sent during key exchange then all they can prove is that Alice and Bob exchanged a few random numbers. This might help them build a circumstantial case that Alice and Bob have been exchanging covert messages, but Alice and Bob’s cryptography doesn’t mathematically incriminate them.

OTR provides strong authentication whilst preserving deniability by being very careful about what information it signs and how.

Why does the sender publish the shared HMAC signing key?

We’ve seen how HMAC signatures are used in OTR to provide “deniable authentication”. But why on earth does the sender go to the effort of publishing their shared signing key to the world once they’re done with it? The reason is similar to, but subtly different from, the reason that Matthew Green called on Google to publish their DKIM signing secret keys several sections ago.

In OTR, a message signature doesn’t need to provide everlasting proof of a message’s authenticity to all people for all time. In fact, to preserve deniability, it’s desirable that an OTR signature provides proof of a message’s validity to as few people as possible for as short a window as possible.

Only the recipient needs to be confident that an OTR message’s signature is valid, and they only need to be confident of this when they are initially receiving the message and checking its signature. Once the recipient has used a signature to authenticate a message, they can record the fact that the message was valid. They need never look at the signature again, and they need never trust it again.

This means that once a recipient has used a signature to verify a message’s validity, we want to make the signature as useless as possible to anyone who might steal it and who might want to use it to prove, or at least provide evidence, that the corresponding message is real. We want to blow the whole system up as soon as we’re finished with it.

We’ve seen that OTR signatures are already close-to-useless to attackers because they are generated using the symmetric HMAC algorithm. An attacker can’t ever use HMAC signatures to authenticate plundered messages to a sceptical third-party, because the third-party knows that the attacker could have trivially faked them. The attacker is in this predicament whether or not the participants publish their secret signing keys.

Nonetheless, the attacker can still use the HMAC signatures they’ve stolen to give themselves and their trusted accomplices additional confidence that the corresponding messages are genuine. From the attacker’s point of view, the HMAC keys are known only to Alice, Bob, and the attacker. Since the attacker knows that they didn’t fake the messages, they can be confident that they were legitimately written and signed by Alice and Bob, even though they can’t cryptographically prove this to anyone else. If the attacker has accomplices who trust them implicitly then those accomplices can be similarly confident in the messages’ veracity. For example, a court might trust an intelligence agency not to fake HMAC signatures, even though they could, and so take signatures as strong evidence of a message’s genuineness.

However, by publishing their ephemeral HMAC signing keys, Alice and Bob make it harder for the attacker to be certain that their haul is genuine. If anyone can see their ephemeral HMAC signing keys, anyone could have written and signed the messages and snuck them onto Bob’s hard drive. Admittedly, the most plausible explanation for how the messages got there is still that Alice and Bob wrote and signed them, but publishing their signing keys is still a cheap and cunning way for Alice and Bob to introduce some extra uncertainty and deniability into the mix. The attacker can’t be as certain as they used to be that their stolen messages are real, and anyone that they share the messages with has to trust not only that the attacker is being honest (as before), but also that the messages weren’t forged by a fourth-party. It’s not an “I am Spartacus” moment so much as a “she is Spartacus, or maybe he is, or perhaps she is, I don’t know, leave me alone.”

Why does OTR use a malleable encryption cipher?

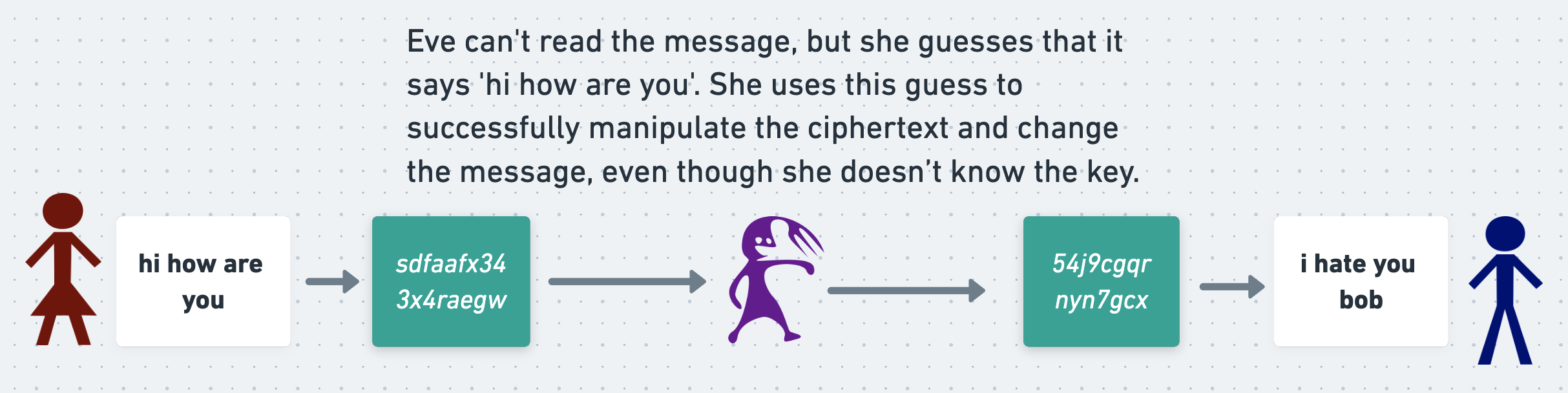

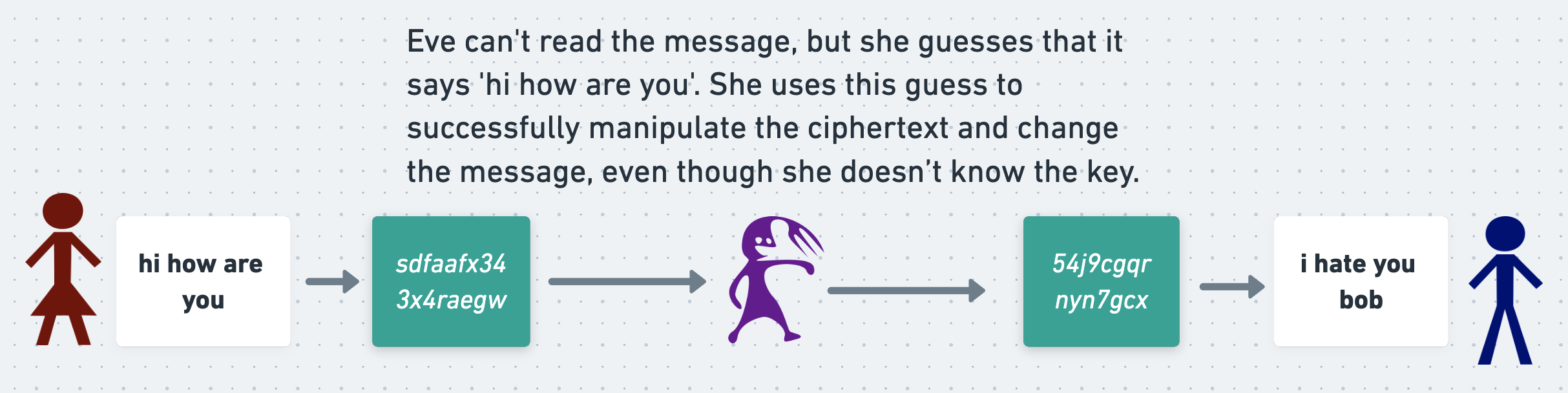

We’ve seen how OTR signatures are designed to crumble to dust when compromised by an attacker. However, it’s possible for even an unsigned ciphertext to make tricky-to-deny ties back to its author. These ties aren’t as mathematically bulletproof as a signature, but in the interests of completeness OTR tries to sever them by performing its encryption using a malleable, easy-to-tamper-with encryption cipher. How does this work?

The problem that the malleable cipher solves is that for most encryption ciphers it’s hard to produce an encrypted ciphertext that decrypts to anything meaningful if you don’t know the encryption key. It’s not quite hard enough that you can assume that any ciphertext that decrypts to a sensible plaintext must have been generated by someone with access to the secret key, but it is still very hard. This means that if a ciphertext decrypts to sensible plaintext then it’s reasonable to infer that it was probably generated by someone with access to the secret encryption key. This could give Eve good evidence that a message was sent by Alice or Bob, even without a useful signature.

To terminate this incomplete but still undesirable connection, OTR performs its encryption using a malleable stream cipher. A malleable cipher is one that makes it comparatively easy for an attacker to produce a ciphertext that decrypts to something sensible, even if they don’t know the encryption key. The attacker does this by correctly guessing the plaintext that a stolen ciphertext decrypts to. If they guess correctly, the attacker can manipulate the stolen ciphertext so that it decrypts to any message of their choice of the same length.
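Here is a small sketch of that manipulation. The stream cipher below is a stand-in that I have cobbled together from SHA-256 in a counter arrangement so that the example needs only the standard library. It is not the cipher that OTR uses, but it shares the relevant property: encryption is just an XOR with a keystream. The key and messages are invented.

```python
import hashlib


def keystream(key: bytes, length: int) -> bytes:
    """Toy keystream: SHA-256 over key plus counter (a stand-in, not OTR's cipher)."""
    out = b""
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return out[:length]


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def encrypt(key: bytes, plaintext: bytes) -> bytes:
    """XOR the plaintext with the keystream."""
    return xor_bytes(plaintext, keystream(key, len(plaintext)))


decrypt = encrypt  # XOR with the same keystream undoes the encryption

# Alice encrypts a message under a key the forger never learns.
key = b"session key the forger never sees"
ciphertext = encrypt(key, b"Lunch at 12?")

# The forger intercepts the ciphertext and guesses the plaintext correctly.
guessed = b"Lunch at 12?"
desired = b"Heist at 3am"  # must be the same length as the guess

# XORing in (guessed XOR desired) swaps one plaintext for the other.
forged_ciphertext = xor_bytes(ciphertext, xor_bytes(guessed, desired))

recovered = decrypt(key, forged_ciphertext)
```

The forger never touches `key`, yet `recovered` is exactly the message they wanted. The trick works because `ciphertext XOR guessed` strips the plaintext away and leaves the keystream, which can then be XORed with any replacement of the same length.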

This tweakability gives Alice and Bob an extra layer of deniability, very similar to the one that they get from publishing their HMAC signing keys. Let’s consider a scenario in which this layer might be useful. Suppose that Alice and Bob accidentally use a weak random-number generator (RNG) when choosing their random secret values at the start of their Diffie-Hellman key exchanges. This means that Eve is able to deduce the values of their random secrets by watching their key exchange traffic. She can use this information to work out their symmetric encryption keys, and use these keys to decrypt Alice and Bob’s messages. This is already a bad outcome, but OTR’s goal in disasters like this is to mitigate the mishap and make the revealed messages as deniable as possible.

Alice and Bob signed their messages using a symmetric HMAC session key, not their private keys. This means that Eve can’t use their signatures as evidence that the messages are real. But even though Eve can’t cast-iron prove anything, she can still try to build a case on-the-balance-of-probability.

She can point out that Alice and Bob signed the intermediate values in their Diffie-Hellman key exchange using their private keys. Since they used a weak RNG to generate their secrets, Eve also knows their secret Diffie-Hellman values. She can use the asymmetric signatures on their intermediate Diffie-Hellman values to prove that Alice and Bob performed a key exchange that produced a specific symmetric session key. Eve can then use this session key to decrypt Alice and Bob’s messages, and show that this produces sensible plaintexts. She then has suggestive evidence that Alice and Bob agreed on a particular session key, and then exchanged a message that could be successfully decrypted by this key.

We’ve discussed previously how this isn’t a total deniability disaster, even without a malleable cipher. All it strictly proves is that Alice and Bob exchanged two random numbers, and there’s no law against that. Symmetrically encrypted ciphertexts, even those encrypted using a non-malleable cipher, are just as useless for proving authorship as symmetrically signed messages. If Eve is able to decrypt a symmetrically encrypted ciphertext then she must have been able to forge that ciphertext. Eve therefore can’t use Alice and Bob’s ciphertexts to prove to other people that they wrote them, because Eve could have produced the ciphertexts herself.

However, as with HMAC signatures, the fact that the ciphertexts decrypt to a sensible plaintext does give Eve herself a lot of confidence that the messages are genuine, as well as anyone else who implicitly trusts Eve. If Eve knows that the symmetric encryption key was known only to Alice, Bob, and herself, and she knows that she didn’t produce the ciphertext, then she knows that Alice or Bob must have.

To solve a similar problem with their HMAC signatures, Alice and Bob injected some extra ambiguity by publishing their HMAC signing keys after they had been used. This made it clear that anyone could have generated the signatures, not just Alice, Bob, or Eve. Eve could still assume that the signatures were probably generated by Alice or Bob, but now she also has to account for the increased possibility, however slight, that they were forged by a fourth-party.

Similarly, by using a malleable cipher, Alice and Bob make it more plausible that a ciphertext that can be legibly decrypted using their symmetric encryption key could also have been produced by a fourth-party, even if this fourth-party didn’t know the key. All that this fourth-party would have had to do is intercept one of their real encrypted messages, correctly guess its plaintext version, and exploit the malleability of the stream cipher used to generate it. This isn’t trivial, but it’s much easier than if Alice and Bob used a more robust, non-malleable cipher.

Even though stream ciphers are easier to tamper with than many other types of cipher, OTR participants are protected from their messages being secretly tampered with by their HMAC signatures. If an attacker who doesn’t know their encryption session key tampers with a message (perhaps by exploiting the malleable stream cipher as described above) then the HMAC signature will no longer be valid. The participants will know that they are under attack.
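A quick sketch of that safety net, assuming for illustration that the HMAC is computed over the ciphertext with SHA-256 as the hash. The key and ciphertext bytes are made up, and the attacker here is one who does not know the signing key.

```python
import hashlib
import hmac


def tag_for(key: bytes, data: bytes) -> bytes:
    return hmac.new(key, data, hashlib.sha256).digest()


def check(key: bytes, data: bytes, tag: bytes) -> bool:
    return hmac.compare_digest(tag_for(key, data), tag)


# Hypothetical signing key and ciphertext.
mac_key = b"hypothetical MAC key"
ciphertext = bytes.fromhex("a1b2c3d4e5f6")
tag = tag_for(mac_key, ciphertext)

# An attacker without the key flips a single bit in transit.
tampered = bytes([ciphertext[0] ^ 0x01]) + ciphertext[1:]

untouched_ok = check(mac_key, ciphertext, tag)
tampered_ok = check(mac_key, tampered, tag)
```

The untouched ciphertext passes and the tampered one fails, so the recipient can discard it. Malleability only becomes useful to a forger once the signing key is out in the open too.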

Despite these mitigations, if the attacker discovers their encryption (and therefore also their signing) keys then their privacy is still unavoidably obliterated. The attacker will be able to forge messages and signatures until the session keys expire. The best that OTR can do is to rotate session keys quickly and preserve as much deniability as possible, using all of the tools described above.

Why is the hash of the encryption key used as the signing key?

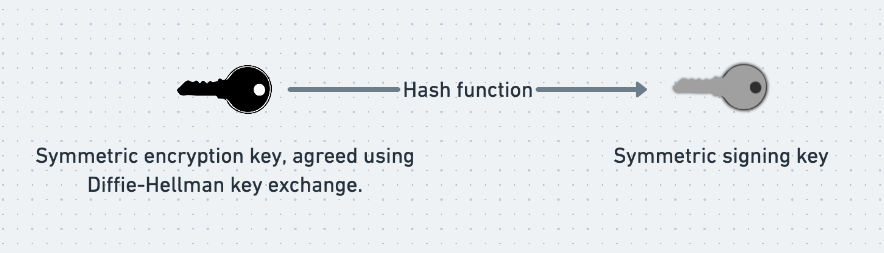

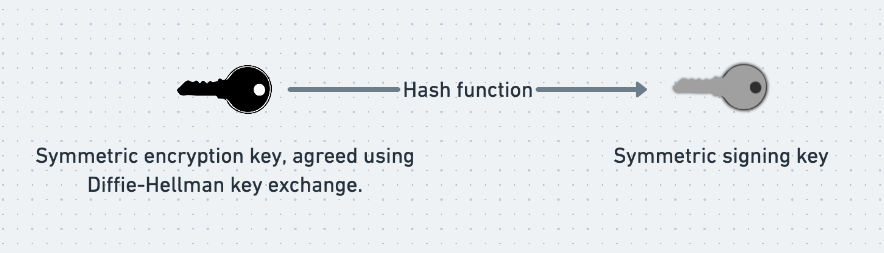

Alice and Bob use a Diffie-Hellman key exchange to agree on a symmetric encryption key. They could use a second Diffie-Hellman key exchange to agree on a symmetric signing key, but they don’t. Instead, they calculate the hash of their encryption key, and use the result as their signing key.

Linking encryption and signing keys in this way provides an interesting perk. It means that an attacker who compromises an encryption session key necessarily also compromises the corresponding signing key, whether they like it or not. All they need to do to derive the signing key is to take the hash of the stolen encryption key.

Oddly, this extra exposure benefits Alice and Bob. Suppose that an attacker is able to compromise a symmetric encryption key (perhaps because of a weak RNG in their key exchange) and read a message and signature. Because the signing key is just the hash of the encryption key, the attacker necessarily compromises the signing key as well. This means that it is impossible to have a situation where an attacker can decrypt a message, but can’t forge a signature for it.
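In code, the link between the two keys is a one-liner. The sketch below assumes SHA-256 for both the key derivation and the HMAC, and uses a made-up encryption key. The exact hash functions that OTR uses may differ, but the shape of the argument is the same.

```python
import hashlib
import hmac


def derive_signing_key(encryption_key: bytes) -> bytes:
    """Signing key = hash of the encryption key (hash choice is an assumption)."""
    return hashlib.sha256(encryption_key).digest()


# Hypothetical 128-bit encryption key agreed by Alice and Bob.
encryption_key = bytes(range(16))
alice_signing_key = derive_signing_key(encryption_key)

# An attacker who learns the encryption key gets the signing key for free.
attacker_signing_key = derive_signing_key(encryption_key)

# So the attacker can produce tags identical to the ones Alice would produce.
forged_tag = hmac.new(attacker_signing_key, b"forged message", hashlib.sha256).digest()
alice_tag = hmac.new(alice_signing_key, b"forged message", hashlib.sha256).digest()
```

Because hashing is a public, deterministic operation, anyone holding the encryption key is exactly one function call away from being able to sign.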

This makes it even harder for an attacker to steal messages and prove their validity to a third-party. The attacker could claim that they’ve never read Borisov, Goldberg and Brewer 2004 and so they didn’t know that they could use a stolen encryption key to derive the corresponding signing key, but if they’ve got this far in their attack then this would be hard to believe.

The Principle of Most Privilege

You may have heard of the Principle of Least Privilege. This widely-applied security precept states:

Any user, program, or process should have only the bare minimum privileges necessary to perform its function.

Applying Least Privilege to your system means that an attacker who compromises one part of it won’t be able to easily pivot into new parts and powers, and will therefore only be able to do limited damage. This is why spies operate in small cells; if they are discovered and interrogated then they don’t have enough knowledge or privileges to help their captors roll up their chain of command. This is also why you shouldn’t give everyone in your company administrator access to all of your systems. You might trust all your employees without reservation, but giving a user unnecessary powers needlessly worsens the consequences if they get hacked. It’s bad if an attacker is able to read all of one person’s emails; it’s much worse if they can download everyone’s emails, delete them all, and forge new messages that look like they came from the CEO.

Nonetheless, I would argue that OTR goes out of its way to achieve the opposite: The Principle of Most Privilege! Whereas the goal of the Principle of Least Privilege is to minimize the power gained by an attacker who compromises one part of a system, the goal of the Principle of Most Privilege is to give an attacker who compromises one part of a system total power over all of it.

To see why this is oddly desirable for OTR, consider that the worst-case situation that OTR tries to prevent at all costs is the one in which an attacker:

- Has access to an unencrypted message and a signature

- Can prove to a sceptical third-party that the signature is valid

- Could not have produced the signature themselves

This combination is a particular disaster because it means that the attacker can read Alice and Bob’s messages, and prove their legitimacy to a sceptical third-party. It’s important that the attacker can prove that a signature is valid (point 2), but couldn’t have produced the signature themselves (point 3). This proves to the third-party that the attacker didn’t forge the signatures themselves. The attacker benefits from their limited capabilities, which is Least Privilege in action but in an oddly unhelpful way.

As we’ve seen, OTR prevents these 3 properties from occurring simultaneously by making it impossible for points 2 and 3 to be true at the same time. If an attacker can prove that a signature is valid (point 2) then they must be in possession of its symmetric HMAC key and so could have produced it themselves (the opposite of point 3). This is the Principle of Most Privilege - if you want to verify signatures, then you also have to be able to create them, whether you want this power or not. Usually we want to segment powers and credentials as much as possible; OTR is an odd situation in which we don’t.

NB: whilst I do think that the Principle of Most Privilege is a useful lens through which to view OTR, it is not a real principle and I made it up. Don’t bother Googling it.

Conclusion

We all hope that our communications stay secret and that we never get hacked. Unfortunately, sometimes we will. Any sensible encryption scheme is secure when everything goes as planned. OTR focusses on what happens when it doesn’t.

OTR provides the standard properties of encryption and authentication, plus the more subtle ones of deniability and forward secrecy. If your keys are compromised then Diffie-Hellman key exchange keeps your past messages safe. If your signatures are stolen then a symmetric signing algorithm means that the attacker can’t use them to prove the messages’ validity to a third-party. Even if you use a weak random-number generator for your key exchange, OTR catches you with backup layers of deniability like a deliberately malleable encryption algorithm.

Admittedly, when we say that your messages are deniable and the attacker can’t prove that you wrote them, we are using a narrow, mathematical definition of the word “prove”. No matter how many cryptography papers you cite in your defence, the attacker might still be able to convince a newspaper or a jury on the balance of probabilities. But it’s still useful to prevent your cryptography from actively working against you.

OTR weaves the strands of its protocol together in a deliberately fragile web. Signatures depend on encryption depend on key-exchanges, exploiting the Principle of Most Privilege (remember, not a real principle) to prevent an attacker from stealing signed messages that they could not have forged themselves. OTR forces us to think rigorously about what we mean by words like “authenticate” and “deny”. Authenticate to who? When?

I hope OTR has sparked your cryptographic imagination as much as it has mine.

This is the final part in a 4 part series about Off-The-Record Messaging (OTR), a cryptographic messaging protocol that protects its users’ communications even if they get hacked. In parts 1 and 2 we looked at problems with common encrypted messaging protocols, such as PGP. We considered four desirable properties of encrypted messaging protocols: confidentiality, authenticity, deniability, and forward secrecy. In part 3 we saw how OTR works and how it provides each of these properties, and in this final part we’ll delve into some of the protocol’s key insights.

Index

- Part 1: the problem with PGP

- Part 2: deniability and forward secrecy

- Part 3: how OTR works

- Part 4: OTR’s key insights

Why does OTR use symmetric signatures instead of asymmetric ones?

OTR goes to a lot of trouble to use symmetric, HMAC signatures to authenticate its messages, instead of asymmetric ones. However, asymmetric signatures generated using public/private keypairs would also do a perfectly good job of authentication. Why does OTR bother with symmetric ones?

The answer is that symmetric signatures help preserve deniability. They help OTR avoid the Podesta problem, in which Wikileaks used (asymmetric) DKIM signatures to prove that the stolen dump of John Podesta’s emails was legitimate.

Here’s how symmetric signatures help with deniability. Remember, since HMAC signatures are symmetric, they are both created and verified using a single shared secret key that is known to both the signer and the verifier. The signer creates the signature by passing their message and the shared secret key into (in OTR’s case) the HMAC signing algorithm. When a verifier needs to verify this signature, they do so by performing the same operation as the signer - using the same key - and making sure that their result matches the signature they were sent.

Next, suppose that an attacker completely compromises Alice’s computer. They steal a pile of messages that she has exchanged with Bob over OTR. The attacker wants to take the messages to Wikileaks and for Wikileaks to publish them. They know that Wikileaks will want cryptographic proof that the messages are real, so they also steal the messages’ HMAC signatures. Since verifying an HMAC signature requires the shared signing key, the attacker uses their complete access to Alice’s computer to steal these keys too, before Alice has a chance to wipe them from her RAM.

The attacker goes to Wikileaks and attempts to use the messages’ HMAC signatures to prove that their stolen message dump is real. For each message they pass the message’s contents and the HMAC secret into the HMAC algorithm. They demonstrate to Wikileaks that this signature matches the one in the stolen dump. For an asymmetric signature, this would be strong proof of the messages’ legitimacy.

However, for a symmetric signature it doesn’t prove anything! Since HMAC signatures are symmetric, the same key is used to both generate and verify them. Since the attacker necessarily needs to know this key in order to verify the signatures, they attacker could trivially have used the same key to forge the signatures themselves. They have no way to prove to Wikileaks that they didn’t, even if they did in fact steal them fair and square. Note that the same logic could apply for asymmetric signatures if Wikileaks suspects that the attacker might have stolen Alice’s private key and forged the signatures themselves.

This defence also works if Alice or Bob turns against the other and suddenly wants to expose their OTR communication to the world. They can publish all of the traffic, keys, signatures, and messages that they exchanged, but they can’t prove that they didn’t forge the signatures themselves. You might mostly trust your friends, but it’s still good to use safe cryptography just in case.

The key difference between the symmetric and asymmetric signature scenarios is that asymmetric signatures are generated and verified using different keys. An attacker can therefore verify stolen signatures without having been able to generate them themselves. When attempting to use symmetric signatures to verify stolen messages, the attacker has too much power for their own good.

Why is it necessary and acceptable for OTR to sign its intermediate Diffie-Hellman values using asymmetric signatures?

We’ve been rattling on about how ingenious and important it is that OTR signs its messages using a symmetric algorithm and a shared secret key. This allows OTR to provide authentication while still preserving deniability. Alice and Bob can be confident that they are talking with each other directly, while also allowing them to deny that they ever spoke in the event that their communication is compromised.

However, the only reason that they are able to trust these symmetric signatures to provide authentication is that they trust that they agreed on the signing key that produced them with the right person. If an attacker were able to manipulate their key exchange process then they might be able to trick Alice into unwittingly agreeing a shared secret with them instead of Bob. The attacker would then be able to talk to Alice while impersonating Bob.

We been talking at great length about the deniability perils of asymmetric cryptography. But at some point, if you want to be sure that you’re talking to the right person on the internet, you’re probably going to have to use asymmetric signatures and public/private keypairs. In OTR Alice and Bob ensure that they are agreeing a key with the right person by using carefully-placed asymmetric signatures. How do these particular asymmetric signatures help, and why are they safe?

As we’ve seen, Alice and Bob agree on their shared symmetric encryption key using a Diffie-Hellman key exchange process. They later take the hash of this symmetric encryption key, and use this as their symmetric signing key. Importantly, they use asymmetric signatures to prove to the other person that they are agreeing these keys with the right person.

Recall that in a Diffie-Hellman key exchange, the participants each start by generating a long random number. They don’t send each other these random numbers, but instead exchange carefully selected “intermediate values”, derived from their original numbers. Thanks to some spectacular mathematics, by combining their own original number with the other party’s intermediate value, both participants can generate the same shared secret key. Just as remarkably, even if an attacker intercepts their traffic and reads both of their intermediate values, the attacker will be unable to construct the shared secret key, since they don’t know either of the original random numbers.

In order to give each other confidence that they are performing a Diffie-Hellman key exchange with the right person, Alice and Bob each sign their intermediate values using their private keys before sending them. When Alice and Bob receive the other person’s intermediate value they can verify the accompanying signature using the other person’s public key. This gives them confidence that the intermediate values were indeed generated by the right person, and therefore that they are performing a key exchange with the right person too. This means that they can trust that the shared secret derived from the key exchange is known only to them and the other person, and so any messages encrypted or signed using it and a symmetric algorithm (like HMAC) are real and unmolested.

Signing a Diffie-Hellman intermediate value with an asymmetric algorithm and a private key provides good authentication and trust in the resulting key, and doesn’t impair participants’ deniability. If an attacker intercepts the signed messages sent during key exchange then all they can prove is that Alice and Bob exchanged a few random numbers. This might help them build a circumstantial case that Alice and Bob have been exchanging covert messages, but Alice and Bob’s cryptography doesn’t mathematically incriminate them.

OTR provides strong authentication whilst preserving deniability by being very careful about what information it signs and how.

Why does the sender publish the shared HMAC signing key?

We’ve seen how HMAC signatures are used in OTR to provide “deniable authentication”. But why on earth does the sender go to the effort of publishing their shared signing key to the world once they’re done with it? The reason is similar to, but subtly different from, the reason that Matthew Green called on Google to publish their DKIM signing secret keys several sections ago.

In OTR, a message signature doesn’t need to provide everlasting proof of a message’s authenticity to all people for all time. In fact, to preserve deniability, it’s desirable that an OTR signature provides proof of a message’s validity to as few people as possible for as short a window as possible.

Only the recipient needs to be confident that an OTR message’s signature is valid, and they only need to be confident of this when they are initially receiving the message and checking its signature. Once the recipient has used a signature to authenticate a message, they can record the fact that the message was valid. They need never look at the signature again, and they need never trust it again.

This means that once a recipient has used a signature to verify a message’s validity, we want to make the signature as useless as possible to anyone who might steal it and who might want to use it to prove, or at least provide evidence, that the corresponding message is real. We want to blow the whole system up as soon as we’re finished with it.

We’ve seen that OTR signatures are already close-to-useless to attackers because they are generated using the symmetric HMAC algorithm. An attacker can’t ever use HMAC signatures to authenticate plundered messages to a sceptical third-party, because the third-party knows that the attacker could have trivially faked them. The attacker is in this predicament whether or not the participants publish their secret signing keys.
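The sketch below shows why a sceptical third-party should be unimpressed by a stolen HMAC signature. It uses Python's standard `hmac` module with SHA-256, which is an assumption made for illustration rather than a statement about the hash that OTR uses, and the key and messages are invented.

```python
import hashlib
import hmac

def hmac_sign(key: bytes, message: bytes) -> bytes:
    """Create an HMAC signature from the shared key and the message."""
    return hmac.new(key, message, hashlib.sha256).digest()

def hmac_verify(key: bytes, message: bytes, signature: bytes) -> bool:
    """Verification is just signing again and comparing the results."""
    return hmac.compare_digest(hmac_sign(key, message), signature)

shared_key = b"hypothetical key shared by Alice and Bob"

# A genuine message, signed by Alice.
genuine = b"Meet at noon"
genuine_sig = hmac_sign(shared_key, genuine)

# An attacker who has stolen the key can sign anything they like.
forged = b"I confess to everything"
forged_sig = hmac_sign(shared_key, forged)

# Both signatures verify, so neither proves who wrote the message.
assert hmac_verify(shared_key, genuine, genuine_sig)
assert hmac_verify(shared_key, forged, forged_sig)
```

Because `hmac_verify` needs the same key as `hmac_sign`, anyone capable of checking a signature is also capable of producing one.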

Nonetheless, the attacker can still use the HMAC signatures they’ve stolen to give themselves and their trusted accomplices additional confidence that the corresponding messages are genuine. From the attacker’s point of view, the HMAC keys are known only to Alice, Bob, and the attacker. Since the attacker knows that they didn’t fake the messages, they can be confident that they were legitimately written and signed by Alice and Bob, even though they can’t cryptographically prove this to anyone else. If the attacker has accomplices who trust them implicitly then those accomplices can be similarly confident in the messages’ veracity. For example, a court might trust an intelligence agency not to fake HMAC signatures, even though they could, and so take signatures as strong evidence of a message’s genuineness.

However, by publishing their ephemeral HMAC signing keys, Alice and Bob make it harder for the attacker to be certain that their haul is genuine. If anyone can see their ephemeral HMAC signing keys, anyone could have written and signed the messages and snuck them onto Bob’s hard drive. Admittedly, the most plausible explanation for how the messages got there is still that Alice and Bob wrote and signed them, but publishing their signing keys is still a cheap and cunning way for Alice and Bob to introduce some extra uncertainty and deniability into the mix. The attacker can’t be as certain as they used to be that their stolen messages are real, and anyone that they share the messages with has to trust not only that the attacker is being honest (as before), but also that the messages weren’t forged by a fourth-party. It’s not an “I am Spartacus” moment so much as a “she is Spartacus, or maybe he is, or perhaps she is, I don’t know, leave me alone.”

Why does OTR use a malleable encryption cipher?

We’ve seen how OTR signatures are designed to crumble to dust when compromised by an attacker. However, it’s possible for even an unsigned ciphertext to make tricky-to-deny ties back to its author. These ties aren’t as mathematically bulletproof as a signature, but in the interests of completeness OTR tries to sever them by performing its encryption using a malleable, easy-to-tamper-with encryption cipher. How does this work?

The problem that the malleable cipher solves is that for most encryption ciphers it’s hard to produce an encrypted ciphertext that decrypts to anything meaningful if you don’t know the encryption key. It’s not quite hard enough that you can assume that any ciphertext that decrypts to a sensible plaintext must have been generated by someone with access to the secret key, but it is still very hard. This means that if a ciphertext decrypts to sensible plaintext then it’s reasonable to infer that it was probably generated by someone with access to the secret encryption key. This could give Eve good evidence that a message was sent by Alice or Bob, even without a useful signature.

To terminate this incomplete but still undesirable connection, OTR performs its encryption using a malleable stream cipher. A malleable cipher is one that makes it comparatively easy for an attacker to produce a ciphertext that decrypts to something sensible, even if they don’t know the encryption key. The attacker does this by correctly guessing the plaintext that a stolen ciphertext decrypts to. If they guess correctly, the attacker can manipulate the stolen ciphertext so that it decrypts to any message of their choice of the same length.
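Here is a small sketch of that manipulation. The stream cipher is a toy built from SHA-256 in a counter arrangement, standing in for the real cipher, and the key, messages, and function names are all made up for the example. The important part is the final step, which never touches the key.

```python
import hashlib

def keystream(key: bytes, length: int) -> bytes:
    """Toy keystream: hash the key with a counter (a stand-in for a real stream cipher)."""
    out = b""
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return out[:length]

def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))

def encrypt(key: bytes, plaintext: bytes) -> bytes:
    """XOR the plaintext with the keystream."""
    return xor_bytes(plaintext, keystream(key, len(plaintext)))

decrypt = encrypt  # XORing with the same keystream undoes the encryption

key = b"hypothetical session key"
original = b"See you at 9pm"
ciphertext = encrypt(key, original)

# The attacker sees only the ciphertext, but correctly guesses the plaintext.
guess = b"See you at 9pm"
chosen = b"Burn the files"  # must be the same length as the guess
assert len(guess) == len(chosen)

# XOR out the guessed plaintext and XOR in the chosen one. No key required.
tampered = xor_bytes(ciphertext, xor_bytes(guess, chosen))

assert decrypt(key, tampered) == chosen
```

If the guess is wrong then the tampered ciphertext decrypts to garbage instead, which is why the attack depends on knowing or guessing the original plaintext.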

This tweakability gives Alice and Bob an extra layer of deniability, very similar to the one that they get from publishing their HMAC signing keys. Let’s consider a scenario in which this layer might be useful. Suppose that Alice and Bob accidentally use a weak random-number generator (RNG) when choosing their random secret values at the start of their Diffie-Hellman key exchanges. This means that Eve is able to deduce the values of their random secrets by watching their key exchange traffic. She can use this information to work out their symmetric encryption keys, and use these keys to decrypt Alice and Bob’s messages. This is already a bad outcome, but OTR’s goal in disasters like this is to mitigate the mishap and make the revealed messages as deniable as possible.

Alice and Bob signed their messages using a symmetric HMAC session key, not their private keys. This means that Eve can’t use their signatures as evidence that the messages are real. But even though Eve can’t cast-iron prove anything, she can still try to build a case on-the-balance-of-probability.

She can point out that Alice and Bob signed the intermediate values in their Diffie-Hellman key exchange using their private keys. Since they used a weak RNG to generate their secrets, Eve also knows their secret Diffie-Hellman values. She can use the asymmetric signatures on their intermediate Diffie-Hellman values to prove that Alice and Bob performed a key exchange that produced a specific symmetric session key. Eve can then use this session key to decrypt Alice and Bob’s messages, and show that this produces sensible plaintexts. She then has suggestive evidence that Alice and Bob agreed on a particular session key, and then exchanged a message that could be successfully decrypted by this key.

We’ve discussed previously how this isn’t a total deniability disaster, even without a malleable cipher. All it strictly proves is that Alice and Bob exchanged two random numbers, and there’s no law against that. Symmetrically encrypted ciphertexts, even those encrypted using a non-malleable cipher, are just as useless for proving authorship as symmetrically signed messages. If Eve is able to decrypt a symmetrically encrypted ciphertext then she must have been able to forge that ciphertext. Eve therefore can’t use Alice and Bob’s ciphertexts to prove to other people that they wrote them, because Eve could have produced the ciphertexts herself.

However, as with HMAC signatures, the fact that the ciphertexts decrypt to a sensible plaintext does give Eve herself a lot of confidence that the messages are genuine, as well as anyone else who implicitly trusts Eve. If Eve knows that the symmetric encryption key was known only to Alice, Bob, and herself, and she knows that she didn’t produce the ciphertext, then she knows that Alice or Bob must have.

To solve a similar problem with their HMAC signatures, Alice and Bob injected some extra ambiguity by publishing their HMAC signing keys after they had been used. This made it clear that anyone could have generated the signatures, not just Alice, Bob, or Eve. Eve could still assume that the signatures were probably generated by Alice or Bob, but now she also has to account for the increased possibility, however slight, that they were forged by a fourth-party.

Similarly, by using a malleable cipher, Alice and Bob make it more plausible that a ciphertext that can be legibly decrypted using their symmetric encryption key could also have been produced by a fourth-party, even if this fourth-party didn’t know the key. All that this fourth-party would have had to do is intercept one of their real encrypted messages, correctly guess its plaintext version, and exploit the malleability of the stream cipher used to generate it. This isn’t trivial, but it’s much easier than if Alice and Bob used a more robust, non-malleable cipher.

Even though stream ciphers are easier to tamper with than many other types of cipher, OTR participants are protected from their messages being secretly tampered with by their HMAC signatures. If an attacker who doesn’t know their encryption session key tampers with a message (perhaps by exploiting the malleable stream cipher as described above) then the HMAC signature will no longer be valid. The participants will know that they are under attack.
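As a rough illustration, assume that the HMAC signature is computed over the ciphertext. The key, the ciphertext bytes, and the function names below are hypothetical, and SHA-256 is again an assumption rather than a claim about OTR's choice of hash.

```python
import hashlib
import hmac

def tag(mac_key: bytes, ciphertext: bytes) -> bytes:
    """HMAC signature over the ciphertext."""
    return hmac.new(mac_key, ciphertext, hashlib.sha256).digest()

def accept(mac_key: bytes, ciphertext: bytes, received_tag: bytes) -> bool:
    """The recipient recomputes the signature and compares."""
    return hmac.compare_digest(tag(mac_key, ciphertext), received_tag)

mac_key = b"hypothetical signing key"
ciphertext = bytes.fromhex("8f3a1c5509e2")  # stand-in for an encrypted message
sent_tag = tag(mac_key, ciphertext)

# An attacker without the signing key flips a single bit in transit.
tampered = bytes([ciphertext[0] ^ 0x01]) + ciphertext[1:]

assert accept(mac_key, ciphertext, sent_tag)    # untouched message passes
assert not accept(mac_key, tampered, sent_tag)  # tampering is detected
```

The malleability of the cipher is therefore only useful to someone who can also produce a matching signature, which is exactly the deniability property that OTR is after.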

Despite these mitigations, if the attacker discovers their encryption (and therefore also their signing) keys then their privacy is still unavoidably obliterated. The attacker will be able to forge messages and signatures until the session keys expire. The best that OTR can do is to rotate session keys quickly and preserve as much deniability as possible, using all of the tools described above.

Why is the hash of the encryption key used as the signing key?

Alice and Bob use a Diffie-Hellman key exchange to agree on a symmetric encryption key. They could use a second Diffie-Hellman key exchange to agree on a symmetric signing key, but they don’t. Instead, they calculate the hash of their encryption key, and use the result as their signing key.

Linking encryption and signing keys in this way provides an interesting perk. It means that an attacker who compromises an encryption session key necessarily also compromises the corresponding signing key, whether they like it or not. All they need to do to derive the signing key is to take the hash of the stolen encryption key.

Oddly, this extra exposure benefits Alice and Bob. Suppose that an attacker is able to compromise a symmetric encryption key (perhaps because of a weak RNG in their key exchange) and read a message and signature. Because the signing key is just the hash of the encryption key, the attacker necessarily compromises the signing key as well. This means that it is impossible to have a situation where an attacker can decrypt a message, but can’t forge a signature for it.
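A short sketch of the derivation, assuming SHA-256 as the hash and using invented key material and function names:

```python
import hashlib
import hmac

def derive_signing_key(encryption_key: bytes) -> bytes:
    """The signing key is simply the hash of the encryption key."""
    return hashlib.sha256(encryption_key).digest()

def hmac_sign(signing_key: bytes, message: bytes) -> bytes:
    return hmac.new(signing_key, message, hashlib.sha256).digest()

# Alice and Bob derive their signing key from the agreed encryption key.
encryption_key = b"hypothetical key from the Diffie-Hellman exchange"
signing_key = derive_signing_key(encryption_key)

# An attacker who steals only the encryption key gets the signing key for free.
stolen_encryption_key = encryption_key
attacker_signing_key = derive_signing_key(stolen_encryption_key)
assert attacker_signing_key == signing_key

# So anyone who can decrypt a message can also forge a valid signature for one.
forged_sig = hmac_sign(attacker_signing_key, b"a message Alice never wrote")
assert hmac.compare_digest(
    forged_sig, hmac_sign(signing_key, b"a message Alice never wrote")
)
```

The derivation only runs in one direction: knowing the signing key does not reveal the encryption key, because the hash can't practically be reversed.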

This makes it even harder for an attacker to steal messages and prove their validity to a third-party. The attacker could claim that they’ve never read Borisov, Goldberg and Brewer 2004 and so they didn’t know that they could use a stolen encryption key to derive the corresponding signing key, but if they’ve got this far in their attack then this would be hard to believe.

The Principle of Most Privilege

You may have heard of the Principle of Least Privilege. This widely-applied security precept states:

Any user, program, or process should have only the bare minimum privileges necessary to perform its function.

Applying Least Privilege to your system means that an attacker who compromises one part of it won’t be able to easily pivot into new parts and powers, and will therefore only be able to do limited damage. This is why spies operate in small cells; if they are discovered and interrogated then they don’t have enough knowledge or privileges to help their captors roll up their chain of command. This is also why you shouldn’t give everyone in your company administrator access to all of your systems. You might trust all your employees without reservation, but giving a user unnecessary powers needlessly worsens the consequences if they get hacked. It’s bad if an attacker is able to read all of one person’s emails; it’s much worse if they can download everyone’s emails, delete them all, and forge new messages that look like they came from the CEO.

Nonetheless, I would argue that OTR goes out of its way to achieve the opposite: The Principle of Most Privilege! Whereas the goal of the Principle of Least Privilege is to minimize the power gained by an attacker who compromises one part of a system, the goal of the Principle of Most Privilege is to give an attacker who compromises one part of a system total power over all of it.

To see why this is oddly desirable for OTR, consider that the worst-case situation that OTR tries to prevent at all costs is the one in which an attacker:

- Has access to an unencrypted message and a signature

- Can prove to a sceptical third-party that the signature is valid

- Could not have produced the signature themselves

This combination is a particular disaster because it means that the attacker can read Alice and Bob’s messages, and prove their legitimacy to a sceptical third-party. It’s important that the attacker can prove that a signature is valid (point 2), but couldn’t have produced the signature themselves (point 3). This proves to the third-party that the attacker didn’t forge the signatures themselves. The attacker benefits from their limited capabilities, which is Least Privilege in action but in an oddly unhelpful way.

As we’ve seen, OTR prevents these 3 properties from occurring simultaneously by making it impossible for points 2 and 3 to be true at the same time. If an attacker can prove that a signature is valid (point 2) then they must be in possession of its symmetric HMAC key and so could have produced it themselves (the opposite of point 3). This is the Principle of Most Privilege - if you want to verify signatures, then you also have to be able to create them, whether you want this power or not. Usually we want to segment powers and credentials as much as possible; OTR is an odd situation in which we don’t.

NB: whilst I do think that the Principle of Most Privilege is a useful lens through which to view OTR, it is not a real principle and I made it up. Don’t bother Googling it.

Conclusion

We all hope that our communications stay secret and that we never get hacked. Unfortunately, sometimes we will. Any sensible encryption scheme is secure when everything goes as planned. OTR focusses on what happens when it doesn’t.

OTR provides the standard properties of encryption and authentication, plus the more subtle ones of deniability and forward secrecy. If your keys are compromised then Diffie-Hellman key exchange keeps your past messages safe. If your signatures are stolen then a symmetric signing algorithm means that the attacker can’t use them to prove the messages’ validity to a third-party. Even if you use a weak random-number generator for your key exchange, OTR catches you with backup layers of deniability like a deliberately malleable encryption algorithm.

Admittedly, when we say that your messages are deniable and the attacker can’t prove that you wrote them, we are using a narrow, mathematical definition of the word “prove”. No matter how many cryptography papers you cite in your defence, the attacker might still be able to convince a newspaper or a jury on the balance of probabilities. But it’s still useful to prevent your cryptography from actively working against you.

OTR weaves the strands of its protocol together in a deliberately fragile web. Signatures depend on encryption depend on key-exchanges, exploiting the Principle of Most Privilege (remember, not a real principle) to prevent an attacker from stealing signed messages that they could not have forged themselves. OTR forces us to think rigorously about what we mean by words like “authenticate” and “deny”. Authenticate to who? When?

I hope OTR has sparked your cryptographic imagination as much as it has mine.